About

- This ACIP GRADE handbook provides guidance to the ACIP workgroups on how to use the GRADE approach for assessing the certainty of evidence.

Summary

The evidence base must be identified and retrieved systematically before the Grading of Recommendations, Assessment, Development and Evaluation (GRADE) approach is used to assess the certainty of the evidence and provide support for guideline judgements. A systematic review should be used to retrieve the best available evidence related to the Population, Intervention, Comparison, and Outcomes (PICO) question. All guidelines should be preceded by a systematic review to ensure that recommendations and judgements are supported by an extensive body of evidence that addresses the research question. This section provides an overview of the systematic review process, external to the GRADE assessment of the certainty of evidence.

Systematic methods should be used to identify and synthesize the evidence1. In contrast to narrative reviews, systematic methods address a specific question and apply a rigorous scientific approach to the selection, appraisal, and synthesis of relevant studies. A systematic approach requires documentation of the search strategy used to identify all relevant published and unpublished studies and the eligibility criteria for the selection of studies. Systematic methods reduce the risk of selective citation and improve the reliability and accuracy of decisions. The Cochrane handbook provides guidance on searching for studies, including gray literature and unpublished studies (Chapter 4: Searching for and selecting studies)1.

6.1 Identifying the evidence

Guidelines should be based on a systematic review of the evidence2,3. A published systematic review can be used to inform the guideline, or a new one can be conducted. Using a previously conducted systematic review saves the time and resources needed to conduct a review from scratch3. Additionally, if a Cochrane or other well-done systematic review exists on the topic of interest, the evidence is likely presented in a well-structured format and meets certain quality standards, thus providing a good evidence foundation for guidelines. As a result, systematic reviews do not need to be developed de novo if a high-quality review of the topic exists. Updating a relevant and recent high-quality review is usually less expensive and requires less time than conducting a review de novo. Databases such as the Cochrane Library, MEDLINE (through PubMed or OVID), and EMBASE can be searched to identify existing systematic reviews that address the PICO question of interest. Additionally, the International Prospective Register of Systematic Reviews (PROSPERO) database can be searched to check for completed or ongoing systematic reviews addressing the research question of interest3. It is important to base an evidence assessment and recommendations on a well-done systematic review, because a poorly conducted review can introduce bias, for example through methods that cannot be replicated or the exclusion of relevant studies. The quality of a published systematic review can be assessed using A Measurement Tool to Assess systematic Reviews (AMSTAR 2)3. This instrument assesses the presence of the following characteristics in the review: relevancy to the PICO question; deviations from the protocol; study selection criteria; search strategy; data extraction process; risk of bias assessments for included studies; and appropriateness of both quantitative and qualitative synthesis4. A Risk of Bias in Systematic Reviews (ROBIS) assessment may also be performed5.

If a well-done systematic review is identified but the date of the last search is more than 6-12 months old, consider updating the search from the last date to ensure that all available evidence is captured to inform the guideline. In a well-done published systematic review, the search strategy will be provided, possibly as an online appendix or supplementary materials. Refer to the Evidence Retrieval section (6.3) for more information.

If a well-done published systematic review is not identified, then a de novo systematic review must be conducted. Once the PICO question(s) have been identified, conducting a systematic review includes the following steps:

- Protocol development

- Evidence retrieval and identification

- Risk of bias assessment

- A meta-analysis or narrative synthesis

- Assessment of the certainty of evidence using GRADE

6.2 Protocol development

There are several in-depth resources available to support authors when developing a systematic review; therefore, this and the following sections cover higher-level points and point to those resources. The Cochrane Handbook serves as a fundamental reference for the development of systematic reviews, and the PRISMA guidance provides detailed information on reporting requirements. To improve transparency and reduce the potential for bias to be introduced into the systematic review process, a protocol should be developed a priori to outline the methods of the planned systematic review. If the methods in the final systematic review deviate from the protocol (as is not uncommon), this must be noted in the final review with a rationale. Protocols should document the predetermined PICO and study inclusion/exclusion criteria without the influence of the outcomes available in published primary studies6. The Preferred Reporting Items for Systematic review and Meta-Analysis Protocols (PRISMA-P) framework can be used to guide the development of a systematic review protocol7. Details on the PRISMA-P statement and checklist are available at https://www.prisma-statement.org/protocols. If the intention is to publish the systematic review in a peer-reviewed journal separately from the guideline, consider registering the systematic review using PROSPERO before beginning the systematic review process8.

To ensure the review is done well and meets the needs of the guideline authors, it is important to consider what type of evidence will be searched and included at the protocol stage before the evidence is retrieved9. While randomized controlled trials (RCTs) are often considered gold standards for evidence, there are many reasons why authors will choose to include nonrandomized studies (NRS) in their searches:

- To address baseline risks

- When RCTs aren't feasible, ethical or readily available

- When it is predicted that RCTs will have very serious concerns with indirectness (Refer to Table 12 for more information about Indirectness)

NRS can serve as complementary, sequential, or replacement evidence to RCTs depending on the situation10. Section 9 of this handbook provides detailed information about how to integrate NRS evidence. At the protocol stage it is important to consider whether or not NRS should be included.

The systematic review team will scope the available literature to develop a sense of whether the systematic review should be limited to RCTs alone or whether a reliance on NRS may also be necessary. Once these inclusion and exclusion criteria have been established, the literature can be searched and retrieved systematically.

6.3 Evidence retrieval and identification

6.3a. Searching databases

An expert librarian or information specialist should be consulted to create a search strategy that is applied to all relevant databases to gather primary literature1. The following databases are widely used when conducting a systematic review: MEDLINE (via PubMed or OVID); EMBASE; Cochrane Central Register of Controlled Trials (CENTRAL). The details of each strategy as actually performed should be recorded: the search terms (keywords and/or Medical Subject Headings [MeSH] terms); the date(s) on which the search was conducted and/or updated; and the publication dates of the literature covered.
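The items to be recorded for each search can be kept in a simple structured log. The following is a minimal sketch only: the class name `SearchRecord`, its field names, and the helper `months_since_last_search` are hypothetical and are not part of the handbook. The helper relates to the 6-12 month guidance in Section 6.1 for deciding when a search may need updating.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class SearchRecord:
    """One database search as actually performed (hypothetical structure)."""
    database: str                    # e.g., "MEDLINE (via PubMed)"
    search_terms: List[str]          # keywords and/or MeSH terms
    date_searched: date              # date the search was first run
    pub_date_from: Optional[date]    # start of publication dates covered
    pub_date_to: Optional[date]      # end of publication dates covered
    updated_on: List[date] = field(default_factory=list)  # dates of any updates

    def months_since_last_search(self, today: date) -> int:
        """Whole calendar months between the most recent search and `today`."""
        last = max([self.date_searched, *self.updated_on])
        return (today.year - last.year) * 12 + (today.month - last.month)
```

A record whose most recent search is older than the review team's chosen threshold (for example, somewhere in the 6-12 month range) can then be flagged for an updated search.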

In addition to searching for evidence, references from studies included for the review should also be examined to add anything relevant missed by the searches. It is also useful to examine clinical trials registries maintained by the federal government (www.clinicaltrials.gov) and vaccine manufacturers, and to consult subject matter experts. Ongoing studies should be recorded as well so that if the review or guideline were to be updated, these studies can be assessed for inclusion.

6.3b. Screening to identify eligible studies

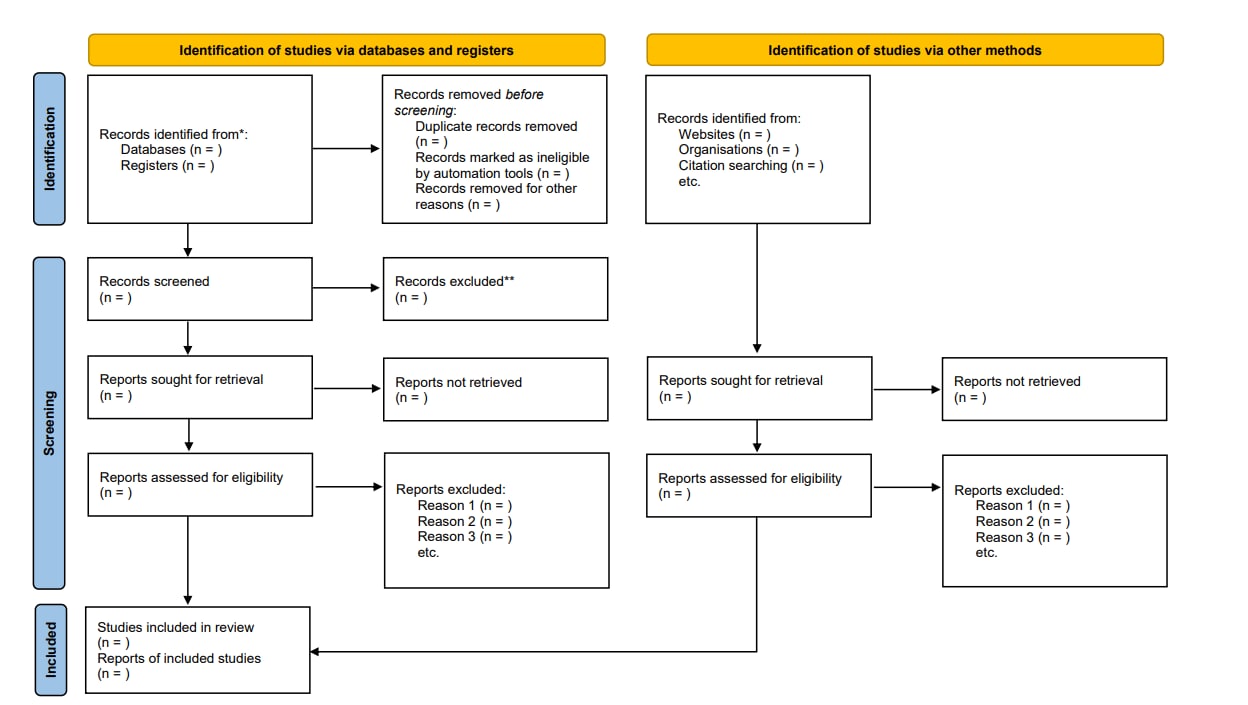

The criteria for including/excluding evidence identified by the search, and the reasons for including and excluding evidence, should be described (e.g., population characteristics, intervention, comparison, outcomes, study design, setting, language). Screening is typically conducted independently and in duplicate by at least two reviewers. Title and abstract screening is done first based on broader eligibility criteria; once relevant abstracts are selected, the full texts of those papers are pulled. The full-text screening is also usually conducted by two reviewers, independently and in duplicate, with more specific eligibility criteria to decide whether the paper answers the PICO question. At both the title and abstract stage and the full-text stage, disagreements between reviewers can be resolved through discussion or involvement of a third reviewer. The goal of the screening process is to sort through the literature and select the most relevant studies for the review. Covidence can be used to organize and manage each step of the screening process. Other programs, such as DistillerSR or Rayyan, can also be used to manage the screening process11,12. The PRISMA Statement (www.prisma-statement.org) includes guidance on reporting the methods for evidence retrieval. A PRISMA flow diagram (Figure 3) presents the systematic review search process and results.

Figure 3. PRISMA flow diagram depicting the flow of information through the different phases of the systematic review evidence retrieval process, including the number of records identified, records included and excluded at each stage, and the reasons for exclusions.

References in this figure:13

*Consider, if feasible to do so, reporting the number of records identified from each database or register searched (rather than the total number across all databases/registers).

**If automation tools were used, indicate how many records were excluded by a human and how many were excluded by automation tools.

From: Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71. doi: 10.1136/bmj.n71. For more information, visit: http://www.prisma-statement.org/
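The duplicate, independent screening described in this section can be illustrated with a short sketch. It assumes each reviewer's decisions are stored as a mapping from a record identifier to an include (`True`) or exclude (`False`) decision; the function name `reconcile_screening` and the record identifiers are hypothetical. Tools such as Covidence, DistillerSR, or Rayyan handle this step in practice.

```python
from typing import Dict, List, Tuple


def reconcile_screening(
    reviewer_a: Dict[str, bool],
    reviewer_b: Dict[str, bool],
) -> Tuple[List[str], List[str], List[str]]:
    """Compare two reviewers' independent include/exclude decisions.

    Returns (included, excluded, conflicts). Records in `conflicts` are
    those to resolve through discussion or a third reviewer.
    """
    if reviewer_a.keys() != reviewer_b.keys():
        raise ValueError("Both reviewers must screen the same set of records")

    included: List[str] = []
    excluded: List[str] = []
    conflicts: List[str] = []
    for record_id in sorted(reviewer_a):
        a, b = reviewer_a[record_id], reviewer_b[record_id]
        if a and b:
            included.append(record_id)      # both reviewers include
        elif not a and not b:
            excluded.append(record_id)      # both reviewers exclude
        else:
            conflicts.append(record_id)     # disagreement to be resolved
    return included, excluded, conflicts
```

The lengths of the returned lists at the title/abstract and full-text stages correspond to the kinds of counts reported in a PRISMA flow diagram.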

6.3c. Data extraction

Once included articles have been screened and selected, relevant information from the articles should be extracted systematically using a standardized and pilot-tested data extraction form. Table 3 provides an example of an ACIP data extraction form (data fields may differ by topic and scope); Microsoft Excel can be used to keep track of and extract relevant details about each study. Data extraction forms typically capture information about: 1) study details (author, publication year, title, funding source, etc.); 2) study characteristics (study design, geographical location, population, etc.); 3) study population (demographics, disease severity, etc.); 4) intervention and comparisons (e.g., type of vaccine/placebo/control, dose, number in series, etc.); 5) outcome measures. For example, for dichotomously reported outcomes, the number of people with the outcome per study arm and the total number of people in each study arm are noted. In contrast, for continuous outcomes, the total number of people in each study arm, the mean or median, as well as standard deviation or standard error are extracted. This is the information needed to conduct a quantitative synthesis. If this information is not provided in the study, reviewers may want to reach out to the authors for more information or contact a statistician about alternative approaches to quantifying data. After extracting the studies, risk of bias should be assessed using an appropriate tool described in Section 8.1 of this handbook.

Table 3. Example of a data extraction form for included studies

| Section | Fields |
|---|---|
| Author, Year | (single field) |
| Name of reviewer | (single field) |
| Date completed | (single field) |
| Study characteristics | Study design; Number of participants enrolled*; Number of participants analyzed*; Loss to follow up (for each outcome); Country |
| Participants | Age; Sex (% female); Race/Ethnicity; Inclusion criteria; Exclusion criteria; Equivalence of baseline characteristics |
| Interventions | Intervention arm: dose, duration, cointerventions; Comparison arm: dose, duration, cointerventions |
| Outcomes | Dichotomous: intervention arm n event/N, control arm n event/N; Continuous: intervention arm mean, SD, N, control arm mean, SD, N |
| Other fields | Type of study (published/unpublished); Funding source; Study period; Reported subgroup analyses |

*total and per group
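To show why the event counts and totals per arm are extracted for dichotomous outcomes, the sketch below computes a risk ratio and the standard error of its logarithm from those four numbers, using the standard large-sample formula. The class and field names are hypothetical, and the sketch does not handle arms with zero events, which need separate treatment (for example, advice from a statistician).

```python
import math
from dataclasses import dataclass


@dataclass
class DichotomousOutcome:
    """Extracted data for one dichotomous outcome in one study (hypothetical)."""
    study: str
    events_intervention: int   # n with the outcome, intervention arm
    total_intervention: int    # N, intervention arm
    events_control: int        # n with the outcome, control arm
    total_control: int         # N, control arm

    def risk_ratio(self) -> float:
        """Risk in the intervention arm divided by risk in the control arm."""
        risk_i = self.events_intervention / self.total_intervention
        risk_c = self.events_control / self.total_control
        return risk_i / risk_c

    def se_log_risk_ratio(self) -> float:
        """Large-sample standard error of log(risk ratio).

        Assumes at least one event in each arm; zero-event arms are not handled.
        """
        return math.sqrt(
            1 / self.events_intervention - 1 / self.total_intervention
            + 1 / self.events_control - 1 / self.total_control
        )
```

The log risk ratio and its standard error are the study-level quantities that a quantitative synthesis typically combines across studies.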

6.4 Conducting the meta-analysis

After the data have been extracted, they can, if appropriate, be statistically combined to produce a pooled estimate of the relative effect (e.g., risk ratio, odds ratio, hazard ratio) or absolute effect (e.g., mean difference, standardized mean difference) for the body of evidence for each outcome. A meta-analysis can be performed when there are at least two studies that report on the same outcome. Several software programs are available that can be used to perform a meta-analysis, including R, STATA, and Review Manager (RevMan).

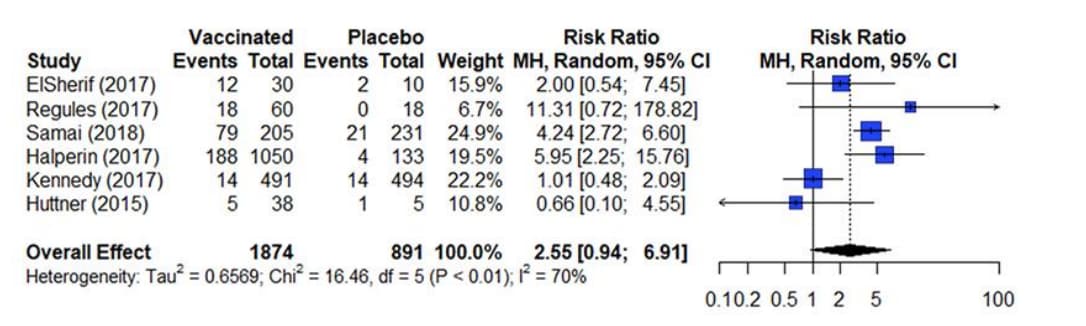

The results from a meta-analysis are presented in a forest plot, as shown in Figure 4. A forest plot presents the effect estimates and confidence intervals for each individual study and a pooled estimate of all the studies included in the meta-analysis14. The square represents the effect estimate, and the horizontal line crossing the square indicates the confidence interval (CI; typically 95% CI). The area of the square reflects the weight given to the study in the analysis. The summary result is presented as a diamond at the bottom.

Figure 4. Estimates of effect for RCTs included in analysis for outcome of incidence of arthralgia (0-42 days)

References in this figure:15

The two most popular statistical methods for conducting meta-analyses are the fixed-effect model and the random-effects model14. These two models typically generate similar effect estimates when used in meta-analyses. However, these models are not interchangeable, and each model makes a different assumption about the data being analyzed.

A fixed-effect model assumes that there is one true effect size shared by all included studies; therefore, all observed differences between studies are attributed to sampling error. The fixed-effect model is used when all the studies are assumed to share a common effect size16. Before using the fixed-effect model in a meta-analysis, consider whether the results will be applied only to the included studies. Since the fixed-effect model provides the pooled effect estimate for the population in the studies included in the analysis, it should not be used if the goal is to generalize the estimate to other populations.
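One common way to compute a fixed-effect pooled estimate is the generic inverse-variance method, sketched below. The handbook does not prescribe a specific estimator, so this is an illustration under assumptions: $y_i$ denotes the effect estimate from study $i$ (for ratio measures, on the log scale), $v_i$ its within-study variance, and $k$ the number of studies.

```latex
w_i = \frac{1}{v_i}, \qquad
\hat{\theta}_{\mathrm{FE}} = \frac{\sum_{i=1}^{k} w_i \, y_i}{\sum_{i=1}^{k} w_i}, \qquad
\mathrm{SE}\bigl(\hat{\theta}_{\mathrm{FE}}\bigr) = \sqrt{\frac{1}{\sum_{i=1}^{k} w_i}}, \qquad
95\%\ \mathrm{CI} = \hat{\theta}_{\mathrm{FE}} \pm 1.96 \, \mathrm{SE}\bigl(\hat{\theta}_{\mathrm{FE}}\bigr)
```

Because each weight depends only on the within-study variance, larger and more precise studies dominate the pooled estimate, which is consistent with attributing all between-study differences to sampling error.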

In contrast, under a random-effects model, some variability between the true effect sizes of the studies is accepted. These effect sizes are assumed to follow a normal distribution. The confidence intervals generated by the random-effects model are typically wider than those generated by the fixed-effect model, as they recognize that some variability in the findings can be due to differences between the primary studies. The weights of the studies are also more similar under the random-effects model. When variations in, for example, the participants or methods across the included studies are suspected, a random-effects model is suggested, because the studies are weighted more evenly than under the fixed-effect model. The majority of analyses will meet the criteria for using a random-effects model. One caveat about the selection of models: when the number of studies included in the analysis is small (<3), the random-effects model will produce an estimate of variance with poor precision. In this situation, a fixed-effect model will be a more appropriate way to conduct the meta-analysis17.
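The contrast between the two models can be sketched in code. The example below uses inverse-variance weighting for the fixed-effect model and the DerSimonian-Laird estimator of between-study variance for the random-effects model. DerSimonian-Laird is one widely used estimator chosen here for illustration; the handbook does not name a specific method. The function names are hypothetical, and the inputs are study effect estimates (for ratio measures, on the log scale) with their within-study variances.

```python
import math
from typing import List, Tuple


def pool_fixed(effects: List[float], variances: List[float]) -> Tuple[float, float, List[float]]:
    """Inverse-variance fixed-effect pooling. Returns (estimate, SE, weights)."""
    if len(effects) < 2 or len(effects) != len(variances):
        raise ValueError("Need at least two studies with matching variances")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    return pooled, math.sqrt(1.0 / total), weights


def pool_random_dl(effects: List[float], variances: List[float]) -> Tuple[float, float, List[float], float]:
    """DerSimonian-Laird random-effects pooling.

    Returns (estimate, SE, weights, tau2), where tau2 is the estimated
    between-study variance.
    """
    pooled_fe, _, w = pool_fixed(effects, variances)
    k = len(effects)
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate
    q = sum(wi * (yi - pooled_fe) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)  # truncated at zero
    # Adding tau2 to every study's variance makes the weights more similar
    w_re = [1.0 / (v + tau2) for v in variances]
    total = sum(w_re)
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / total
    return pooled, math.sqrt(1.0 / total), w_re, tau2


if __name__ == "__main__":
    # Hypothetical log risk ratios and variances, for illustration only
    y = [-0.5, -0.1, -0.9]
    v = [0.04, 0.01, 0.09]
    fe, se_fe, _ = pool_fixed(y, v)
    re, se_re, _, tau2 = pool_random_dl(y, v)
    print(f"fixed-effect:   {fe:.3f} (SE {se_fe:.3f})")
    print(f"random-effects: {re:.3f} (SE {se_re:.3f}), tau^2 = {tau2:.3f}")
```

When the estimated between-study variance is greater than zero, the random-effects standard error is larger (giving a wider confidence interval) and the study weights are closer to one another, matching the behavior described above.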

References

- Lefebvre C, Glanville J, Briscoe S, et al. Chapter 4: Searching for and selecting studies. In: Higgins J, Thomas J, Chandler J, et al, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.3 (updated February 2022). Cochrane; 2022. www.training.cochrane.org/handbook.

- Committee on Standards for Developing Trustworthy Clinical Practice Guidelines, Board on Health Care Services, Institute of Medicine. Clinical Practice Guidelines We Can Trust. National Academies Press; 2011.

- World Health Organization. WHO handbook for guideline development, 2nd ed. 2014: World Health Organization. 167.

- Shea BJ, Reeves BC, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358:j4008. doi:10.1136/bmj.j4008

- University of Bristol. ROBIS tool.

- Lasserson T, Thomas J, Higgins J. Chapter 1: Starting a review. In: Higgins J, Thomas J, Chandler J, et al, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.3. Cochrane; 2022. www.training.cochrane.org/handbook

- Moher D, Shamseer L, Clarke M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015;4:1. doi:10.1186/2046-4053-4-1

- PROSPERO. York.ac.uk. https://www.crd.york.ac.uk/PROSPERO/

- Cuello-Garcia CA, Santesso N, Morgan RL, et al. GRADE guidance 24: optimizing the integration of randomized and non-randomized studies of interventions in evidence syntheses and health guidelines. J Clin Epidemiol. 2022;142:200-208. doi:10.1016/j.jclinepi.2021.11.026

- Schünemann HJ, Tugwell P, Reeves BC, et al. Non-randomized studies as a source of complementary, sequential or replacement evidence for randomized controlled trials in systematic reviews on the effects of interventions. Research Synthesis Methods. 2013;4(1):49-62. doi:10.1002/jrsm.1078

- DistillerSR | Systematic Review and Literature Review Software. DistillerSR.

- Rayyan – Intelligent Systematic Review. https://www.rayyan.ai/

- Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. doi:10.1136/bmj.n71

- Deeks J, Higgins J, Altman D. Chapter 10: Analysing data and undertaking meta-analyses. In: Higgins J, Thomas J, Chandler J, et al, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.3 (updated February 2022). Cochrane; 2022. www.training.cochrane.org/handbook.

- Choi MJ, Cossaboom CM, Whitesell AN, et al. Use of ebola vaccine: recommendations of the Advisory Committee on Immunization Practices, United States, 2020. MMWR Recommendations and Reports. 2021;70(1):1.

- Borenstein M, Hedges LV, Higgins JP, Rothstein HR. Introduction to meta-analysis. John Wiley & Sons; 2021.

- Borenstein M, Hedges LV, Higgins JP, Rothstein HR. A basic introduction to fixed-effect and random-effects models for meta-analysis. Research Synthesis Methods. 2010;1:97-111. doi:10.1002/jrsm.12