Key points

- Collaboratively engage with interest holders to focus the evaluation efforts and develop the most appropriate evaluation design.

- Purpose statement

- Types of evaluation to be conducted

- List of intended users and uses of findings

- List of evaluation questions

- Description of the overarching evaluation design

Overview and Importance

Step 3 of the Evaluation Framework identifies what aspects of the program to evaluate. It uses the priorities and information needs from interest holders identified in Step 1 and the logic model from Step 2 to focus the evaluation questions and design. Step 3 prioritizes what is important to understand and identifies an evaluation design that is sensitive, relevant, and not harmful to the community. It also accommodates the program context and resources while incorporating relevant evaluation standards.

Refer to the full-length CDC Program Evaluation Action Guide for additional information, examples and worksheets to apply the concepts discussed in this step.

Develop Evaluation Purpose Statement

Identifying an evaluation's main goal or purpose before conducting it sets the tone for how the evaluation will be conducted. The evaluation purpose statement can be influenced by the following:

Intended users of the evaluation are people who have a specific interest in the evaluation and a clear use in mind. This can include funders, program implementers, or community members. Key Questions to Consider:

- Who will use and/or act on the evaluation findings?

Intended uses of the evaluation are ways in which the intended users plan to use the knowledge and findings gained from the evaluation. Key Questions to Consider:

- What information is important to interest holders?

- What do they want to gain from the evaluation?

- When are evaluation findings needed?

Program components are the contextual pieces of program information used to assess evaluation readiness. Key Questions to Consider:

- What is the stage of development of the program (planning, implementation, or maintenance)?

- What is the overall context?

- Which components of the program are best suited for evaluation?

Resources available for the evaluation should also be assessed. Key Questions to Consider:

- What resources (i.e., time, money, effort) are needed for the evaluation?

- What financial resources are available?

- How much staff time will be needed?

Determine the Type of Evaluation

The evaluation purpose provides clarity on the type of evaluation to conduct. The most common types of evaluations include:

Formative Evaluation

Identifies whether the proposed program is feasible, appropriate, and acceptable to the population of reach before it is fully implemented [1].

- When to use it? During the development of a new program, policy, or organizational approach, when an existing program is being modified or used in a new setting, or when you want to focus on the who, what, when, where and how.

- Why is it useful? Allows for modifications before full implementation begins.

Process/Implementation Evaluation

Assesses how well the program is being implemented as planned [1].

- When to use it? At the beginning of program implementation or during operations (implementation phase) of an existing program.

- Why is it useful? It identifies gaps or issues early so that they can be addressed, and it allows programs to monitor activity efficiency.

Outcome Evaluation

Measures how well a program, policy or organization has achieved its intended outcomes. It cannot determine what caused specific outcomes (causality), only whether they have been achieved [1].

- When to use it? After the program has been implemented.

- Why is it useful? Identifies whether the program is achieving its goals.

Impact Evaluation

Compares the outcomes of a program, policy or organization to estimates of what the outcomes would have been without it. Usually seeks to determine whether activities caused the observed outcomes [1].

- When to use it? At the end of a program or at appropriate times during its maintenance phase.

- Why is it useful? It provides evidence for use in policy and funding decisions.

Economic Evaluation

Examines programmatic effects relative to program costs [1].

- When to use it? At the beginning of a program, during its maintenance phase, or at the end of the program.

- Why is it useful? Assesses program effects relative to the cost to produce them.

Developing Evaluation Questions

Evaluation questions identify the aspects of the program that will be investigated [2]. These questions are usually broad in scope, open-ended, and either process or outcome focused. They help to define the boundaries of the evaluation that align with its purpose, intended uses, information needs, and interest holder priorities.

Developing and prioritizing evaluation questions will depend on several factors:

- Interest holder priorities and when information is needed.

- Appropriate fit within the program's description, goals, and stage of development.

- Relevance to the evaluation purpose and usefulness of the information to interest holders.

- Resource availability in time, funding, and staffing.

- Timeline for conducting the evaluation.

- Availability of similar insights from prior evaluations or other evidence activities.

- Attributes or factors that facilitate achieving optimal health.

In addition to developing and prioritizing the evaluation questions, it is also important to assess their effectiveness and appropriateness.

Determine Evaluation Design

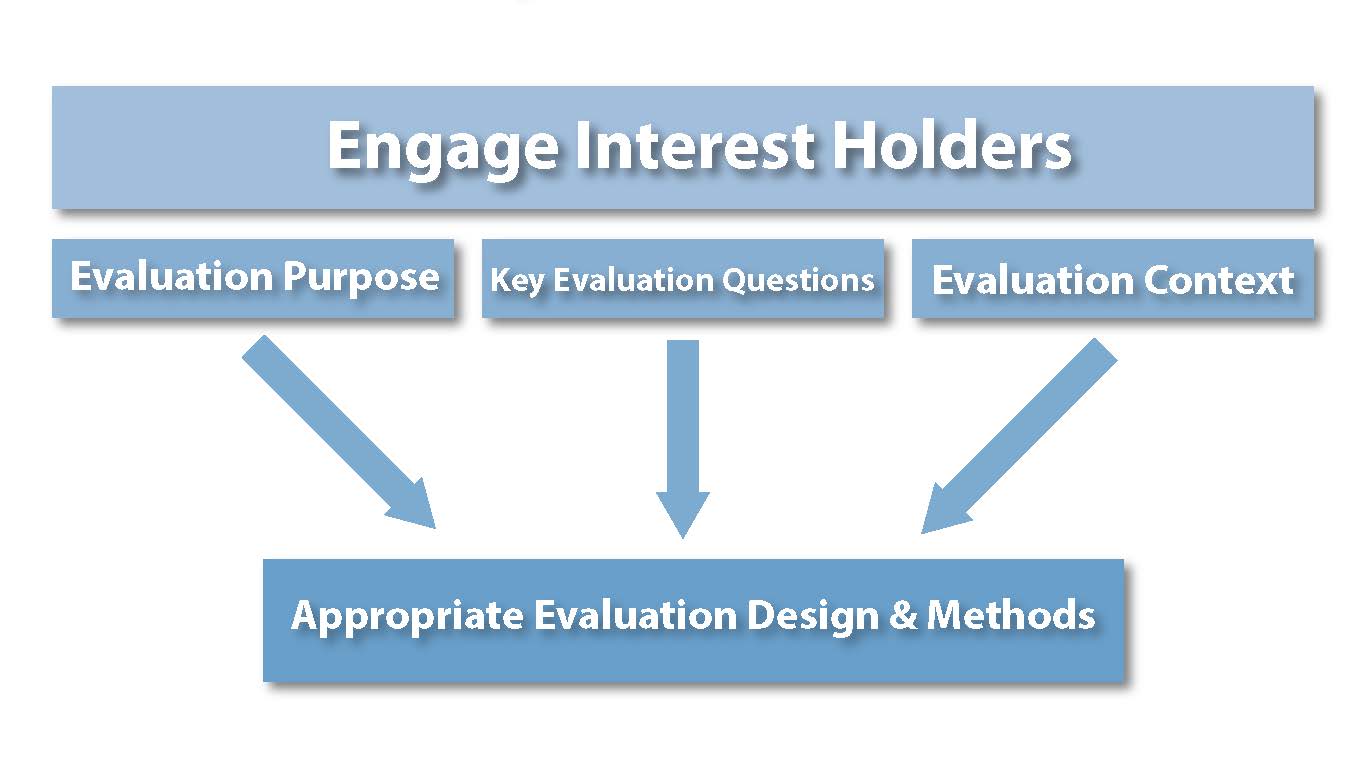

The evaluation design is the overarching structure for the evaluation. Selecting the appropriate evaluation design is guided by the evaluation purpose, key evaluation questions, and evaluation context, including available resources and constraints.

The figure above demonstrates that interest holder engagement influences the evaluation purpose, key evaluation questions, and the understanding of the evaluation context. Collaboratively engaging interest holders gives the evaluation credibility and ensures that the selected design will be able to answer the key evaluation questions with scientific integrity within real-world constraints.

There are three types of scientific designs used in evaluation.

Experimental:

Units of study are randomly assigned to a control group or a treatment/experimental group [3].

- Why use it? Able to demonstrate a causal relationship between activities and outcomes. Considered the rigorous "gold standard," but it may not always be practical due to costs. It also may not be ethical to randomly assign people if the program or intervention involves treatment or another service.

- Example: Randomized controlled trial (RCT)

Quasi-experimental:

Compares non-equivalent groups that are not randomly assigned [4].

- Why use it? Enables experimentation when random assignment isn't possible. Additional analyses are needed to control for extraneous factors that can influence findings.

- Example: Time series

Observational:

Does not use comparison or control groups [5].

- Why use it? Simple design that may require fewer resources to conduct, but it cannot be used to infer causality.

- Example: Case studies or cross-sectional studies

Applying Cross-Cutting Actions and the Evaluation Standards

As with the other evaluation framework steps, it is important to integrate the evaluation standards and cross-cutting actions when focusing the evaluation questions and design in Step 3. See Table 6 in the CDC Program Evaluation Framework, 2024 for key questions for integrating the evaluation standards and cross-cutting actions for Step 3.

1. Salabarría-Peña, Y., Apt, B.S., Walsh, C.M. (2007). Practical Use of Program Evaluation among Sexually Transmitted Disease (STD) Programs. Atlanta (GA): Centers for Disease Control and Prevention.

2. Wingate, L., Schroeter, D. (2007). Evaluation questions checklist for program evaluation. Retrieved from http://wmich.edu/evaluation/checklists

3. Shackman, G. (2020). What is Program Evaluation? A Beginner's Guide.

4. The Pell Institute for the Study of Opportunity for Higher Education, the Institute for Higher Education Policy and Pathways to College Network. (2024). Evaluation Toolkit. Retrieved from "Choose an Evaluation Design," Pell Institute.

5. University of Washington I-Tech Fostering Healthier Communities. (2024). Training Evaluation Framework and Tools. Retrieved from Evaluation-Design-and-Methods.pdf (go2itech.org).

6. Kidder DP, Fierro L, Luna E, et al. CDC Program Evaluation Framework, 2024. MMWR Recomm Rep. 2024;73(No. RR-6):1-37. doi: http://dx.doi.org/10.15585/mmwr.rr7306a1. Retrieved from https://www.cdc.gov/mmwr/volumes/73/rr/rr7306a1.htm?s_cid=rr7306a1_w