At a glance

Key Findings

- Each year since 1994, the Centers for Disease Control and Prevention (CDC) has collected data to estimate vaccination coverage among U.S. children 19-35 months of age to assess performance of national, state, territorial, and selected local area immunization programs. CDC collects these data through the National Immunization Survey-Child (NIS-Child).

- We check the accuracy of NIS-Child data each year. In 2017, we added a comparison of vaccination coverage by age 19 months for birth cohorts included in both the current and prior year surveys (the "bridging birth cohort"). By restricting analysis to children in this bridging birth cohort, and limiting to vaccinations received before 19 months of age, the vaccination coverage estimates from the current and prior survey years should be the same, other than random differences due to sampling. For this reason, we expected to find no difference in vaccination coverage between the estimates from the prior and current survey years, for children in the bridging birth cohort.

- For several of the vaccinations, we found drops in vaccination coverage by age 19 months from 2015 to 2016 among the bridging birth cohort.

- We evaluated NIS-Child operations and data to assess possible reasons for a drop in coverage among the bridging birth cohort.

- Specifically, we examined respondent (parent and vaccination provider) factors and survey operations factors that could cause a change in survey bias, defined as a change in estimates of vaccination coverage for the same birth cohorts of children due to systematic differences between the 2015 and 2016 surveys.

- Respondent factors included differences in characteristics of parent or provider respondents between the two years. We ruled out participant demographics, geographic location, and type of telephone(s) used as sources of changes in survey bias. We also ruled out provider factors, including the number of providers who responded each year, and how providers submit data (by phone, fax, or mail).

- Operational factors included the manner in which the survey was conducted and data analysis methods. We ruled out survey administration factors, including differences in originating call center or calling rules, and in how the data were entered or processed after they were received, as sources of changes in survey bias. We also ruled out analytical differences in sample construction, weighting procedures, and data cleaning and analysis.

- Investigation of survey respondents and operations did not explain the observed discrepancies among the bridging birth cohort. These observed discrepancies may be due to random fluctuations in survey respondents. We will continue to assess accuracy of NIS-Child estimates each year using the bridging birth cohort comparison and other methods.

- To better understand the impact of these observed discrepancies among the bridging birth cohort on assessing trends, we looked more closely at what is being measured by the difference in estimates from two adjacent years of NIS-Child data. We found that the bridging birth cohort differences in coverage by age 19 months led to small decreases in overall vaccination coverage for some vaccines, even though this part of the overall difference does not measure changes in vaccination coverage over time. This occurred when other components of the overall difference that can reflect changes in vaccination coverage over time showed increases or no difference (e.g., part of the overall difference compares vaccination coverage between younger children from the 2016 survey that did not overlap in birth dates with older children from the 2015 survey). This highlights limitations of using annual estimates to assess changes in performance of immunization programs, particularly when there are signals of possible change in survey accuracy from the bridging birth cohort analysis.

- Given these limitations, we applied a more direct method of assessing trends in vaccination coverage by month and year of birth to NIS-Child data from 2012–2016. This included children born during January 2009 through May 2015.

- By assessing trends by month and year of birth, we found that vaccination coverage is not decreasing over time for most vaccinations. There is no evidence of drops or increases in vaccination coverage by age 24 months over time among the birth cohorts of children included in the 2015 and 2016 NIS-Child (children turning 24 months during January 2014 through January 2017).

- Measuring year-to-year trends in vaccination coverage by birth cohort may be more informative for the NIS-Child community of users than the measure used currently and historically.

Introduction

Since 1994, the Centers for Disease Control and Prevention (CDC) has collected data to estimate vaccination coverage among U.S. children 19-35 months of age through the National Immunization Survey-Child (NIS-Child). CDC uses these data to assess performance of national, territorial, state, and selected local immunization programs and assist with implementation of the Vaccines for Children (VFC) program. CDC publishes coverage estimates in the Morbidity and Mortality Weekly Report and online in ChildVaxView. The NIS-Child uses a random digit-dialing sample of landline and cellular telephone numbers to contact parents or guardians of children aged 19-35 months in the 50 states, the District of Columbia, selected local areas, and U.S. territories. Parents or guardians are interviewed by telephone to collect sociodemographic and health insurance information for all age-eligible children in the household. With consent, a survey is mailed to all vaccination providers identified by the parent/guardian to collect dates and types of all vaccinations administered. Vaccination coverage estimates use only provider-reported vaccination data.

We check the quality of NIS-Child data each year. This year, we added an analysis of the current and prior survey year data to assess changes in survey accuracy, including children who were eligible for inclusion in both survey years, the "bridging birth cohort". This approach for assessing changes in accuracy of NIS-Child estimates was developed in 2015 [1] and added to routine data quality checks in 2017. Specifically for the 2015 and 2016 NIS-Child data, we compared estimates of vaccination coverage by age 19 months for children born during January 2013 through May 2014, the bridging birth cohort included in both the current survey year and the prior survey year. Differences in vaccination coverage by age 19 months among this bridging birth cohort could signal a change in survey bias, a systematic difference that could affect validity of vaccination coverage estimates.

We found signals of possible change in survey accuracy from 2015 to 2016, indicated by an unexpected drop in vaccination coverage by age 19 months among the bridging birth cohort for some of the vaccinations examined. We investigated possible reasons for these coverage drops among the bridging birth cohort. We examined a comprehensive set of parent/guardian and provider participant factors and survey operational factors to detect any potential sources for changes in survey bias.

Because changes in survey accuracy could also affect interpretation of trends, we evaluated how the signals among the bridging cohort were related to the differences in vaccination coverage estimates from 2016 and 2015 data that included all survey respondents from each survey year. This led to a more direct assessment of national trends in vaccination coverage by month and year of birth using data from 2012-2016.

The findings from these investigations are described below.

Findings

We conducted national bridging birth cohort analysis for survey years 2015-2016, for each of fifteen vaccination measures. To provide historical perspective, we also conducted this analysis for survey years 2011-2012, 2012-2013, 2013-2014, and 2014-2015. For each pair of survey years compared, analysis was restricted to children in birth cohorts eligible for inclusion in both survey years. For example, children born during January 2013 through May 2014 were eligible for both the 2015 and 2016 surveys, and children born during January 2010 through May 2011 were eligible for both the 2012 and 2013 surveys. For each pair of survey years compared, we estimated vaccination coverage by 19 months (before the day the child reached 19 months of age) within each survey year by month and year of birth. We then computed the average difference of these vaccination coverage estimates within each of the 17 monthly birth cohorts included in the bridging cohort across the two survey years.
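The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure CDC or NORC actually ran: the function name `average_bridging_difference`, the dictionary layout, and the coverage values are all hypothetical, and the sketch takes a simple unweighted mean of the per-cohort differences, ignoring the survey weights and variance estimation a real analysis would need.

```python
from statistics import mean

def average_bridging_difference(prior, current):
    """Mean of (current - prior) coverage by 19 months over shared birth cohorts.

    prior, current: dicts mapping a birth-month label (e.g. "2013-01") to the
    estimated percent vaccinated before age 19 months in that survey year.
    """
    shared = sorted(set(prior) & set(current))  # cohorts present in both years
    if not shared:
        raise ValueError("no overlapping birth cohorts")
    return mean(current[m] - prior[m] for m in shared)

# Hypothetical estimates for three of the 17 bridging cohorts (made-up values).
est_2015 = {"2013-01": 84.0, "2013-02": 83.0, "2013-03": 85.0}
# "2014-06" is not in the 2015 survey, so it is ignored by the comparison.
est_2016 = {"2013-01": 82.0, "2013-02": 80.0, "2013-03": 84.0, "2014-06": 81.0}

diff = average_bridging_difference(est_2015, est_2016)
print(round(diff, 2))  # average difference in percentage points
```

A negative result corresponds to lower estimated coverage in the later survey year for the same birth cohorts, which is the kind of signal the report investigates.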

Statistically significant decreases in average vaccination coverage among the bridging cohort were observed for six vaccinations from 2011 to 2012 and for nine vaccinations from 2015 to 2016 (Table 1). These decreases ranged from -1.8 (≥3 PCV doses) to -3.8 (combined series) percentage points from 2011 to 2012, and from -2.1 (≥3 DTaP doses) to -4.7 (≥4 PCV doses) percentage points from 2015 to 2016. For comparisons of other years (2012 vs. 2013, 2013 vs. 2014, and 2014 vs. 2015), there were no statistically significant differences.

The results of the bridging birth cohort analysis identified signals of possible change in survey accuracy. CDC and the contractor conducting the NIS-Child, NORC at the University of Chicago (NORC), evaluated factors that could cause changes in survey bias. This evaluation covered differences between 2015 and 2016 in: characteristics of the NIS-Child samples; the number of vaccination providers reported by parents/guardians; processing of data from the household and provider survey phases; final vaccination coverage estimates by type of provider return (completing the Immunization History Questionnaire [IHQ] mailed to providers, or sending a medical record); survey weighting steps and final weights; and bridging cohort analysis by child, family, and other characteristics (site of telephone center conducting household interviews, state of residence).

Parent/Guardian Participant Factors

The NIS-Child samples in 2015 and 2016 among the bridging birth cohort were similar, with three statistically significant demographic differences between the two years out of 34 characteristics compared (Table 2).

NORC examined the estimated drop in ≥4 DTaP coverage among the bridging cohort by sociodemographic characteristics (Table 3), by state and selected local area, and by the participant's state of residence (Table 4). The 2015-2016 estimated drop in coverage among the bridging cohort was not confined to any one category or small group of characteristics of children, and was widespread geographically, not confined to any one state or local area.

NORC conducted analyses by source of sample and telephone status of the child's household. Children from the landline sample may be classified as landline-only or dual-user (landline and cell phone), while children in the cell-phone sample may be classified as cell-phone-only or dual-user. The unweighted 2016 cell-phone sample contained over two percentage points more cell-phone-only children than the 2015 cell-phone sample, while the 2016 landline sample contained about one percentage point fewer landline-only children than the 2015 sample. These shifts in the composition of the sample are consistent with the ongoing evolution of the telephone status of American households. There were drops in ≥4 DTaP coverage among the bridging cohort at each level of respondent telephone status (Table 5).

Provider Participant Factors

NORC considered the number of providers nominated in the household phase of the NIS-Child and the number who responded to the provider-record-check phase (Tables 6a, 6b). None of the differences between 2015 and 2016 were statistically significant.

Some nominated providers return vaccination histories by the IHQ, a survey instrument designed for this purpose, while other providers return the data in the form of medical records, which must be transcribed onto IHQs prior to further data processing. Some providers return the information by mail, while others respond by fax. The way providers returned vaccination data (e.g., by mail or by fax) and the type of data returned (e.g., IHQ or medical record) were similar for 2015 and 2016 surveys (Table 7). Drops in ≥4 DTaP coverage among the bridging cohort were present for children with fax returns or mail returns, and with returns of the IHQ or with vaccination medical records (Table 8).

Survey Operations Factors

Operational factors included the manner in which the survey was conducted and data analysis methods.

Telephone interviewing for the household phase of the NIS-Child was conducted in four national telephone centers in 2016. To examine possible effects on data quality, NORC compared estimated vaccination coverage rates for ≥4 DTaP doses by age 19 months for 2015 and 2016 for the bridging cohort by the site at which the interview was completed (Table 9). All sites achieved data of consistent quality in 2015 and 2016.

The vendor providing the telephone sample, Marketing Systems Group (MSG), reported that there were no changes from 2015 to 2016 in the sources or systems used to derive the telephone number lists. To verify that there were no changes in procedures that may have caused estimated vaccination coverage to drop, NORC also analyzed the 2015 and 2016 NIS-Child data to check the consistency and accuracy of the survey's data collection and data processing operations.

Vaccination history data for children are reported by their providers on IHQs and in other formats. These raw history data undergo considerable processing, cleaning, and weighting. To test whether data processing mistakes caused 2016 coverage estimates to be lower than 2015 estimates, NORC went back to the original provider data, and computed the total number of DTaP and PCV shots given by age 19 months. The estimated drop in coverage is present even in the original data prior to any processing (Table 10).

NORC manually checked 100 hardcopy IHQs and found no systematic problems in transcription and data entry. While these results are inconclusive due to small sample size, they do not point to systematic errors in manual processing or data entry of IHQs as a cause for the observed declines in coverage.

Finally, NORC conducted several additional technical tests and checks on the quality of the 2015 and 2016 data. Sampling processes were identical across years and so cannot be responsible for the change in vaccination coverage. The calling rules used when an answering machine or a recorded voice-mail message is left did not change across 2015 and 2016 survey years.

NORC concluded from this evaluation that no mistakes or inconsistencies occurred in weighting, and that the drops in vaccination coverage were present at every step of weighting. Thus, an unexpected weighting effect could not have caused the observed 2015-2016 drop in coverage.

Summary of Findings for Participant and Operations Factors

Investigation of survey participants and operations revealed no reasons to explain the observed discrepancies in vaccination coverage among the bridging cohort. These observed discrepancies may be due to random fluctuations in characteristics of survey respondents.

We did not find a reason for the statistically significant differences in 2015 and 2016 coverage estimates among the bridging birth cohort. However, we were concerned that these differences among the bridging birth cohort could affect interpretation of coverage differences for the full 2015 and 2016 samples.

To show how the bridging birth cohort analysis related to the difference in annual estimates, we first organized monthly birth cohorts into three groups:

- Older children included only in the first survey year (e.g., for 2015, born January 2012 through December 2012)

- Children included in both survey years—the bridging or overlapping cohort (e.g., for 2015 and 2016, born January 2013 through May 2014)

- Younger children included only in the most recent survey year (e.g., for 2016, born June 2014 through May 2015)

We further split each vaccination coverage estimate into two parts with the same denominator – coverage by age 19 months, and coverage during ages 19 months through age at assessment (maximum 35 months) (Figures 1, 2).

This allowed us to split the overall difference in annual NIS-Child estimates into four parts, with contributions from the non-overlapping and overlapping (bridging) birth cohorts further split by age at vaccination (vaccination by age 19 months and vaccination at or after age 19 months). This shows how each component contributes to the overall difference in vaccination coverage estimates. The contribution from the bridging birth cohort for vaccination by age 19 months does not reflect change in vaccination over time because it compares two independent estimates of the same birth cohorts of children by the same age. The contribution from the non-overlapping birth cohorts for vaccination by age 19 months would reflect changes in vaccination coverage over time (see Appendix for further explanation).

For each of the 15 vaccination measures and for each pair of years compared in the bridging cohort analysis, we showed how the overall estimated difference in vaccination coverage between survey years resulted from differences in estimates among the bridging birth cohort and non-overlapping birth cohorts (Tables 11-15). Of the six vaccinations with statistically significant differences (drops) in overall 2016 estimates (compared to 2015), all six had statistically significant drops from the bridging cohort analysis.

We found that interpretation of differences in adjacent annual NIS-Child estimates is complicated. Further, we found that statistically significant differences among the bridging cohort can contribute to statistically significant differences between overall annual estimates, even when the difference among the non-overlapping birth cohorts indicates no change in coverage over time.

Having identified how possible changes in survey accuracy can worsen existing limitations of the annual NIS-Child estimates, we sought a more direct approach to assess changes in vaccination coverage by birth cohort [2-6]. We estimated linear trends in national vaccination coverage by month and year of birth, using NIS-Child data from 2012–2016.

For each of the fifteen vaccination measures, we estimated national coverage by age 19, 24, and 35 months by month and year of birth. Hepatitis B birth dose and rotavirus vaccination were only assessed by age 19 months. For estimates by 24 and 35 months, we used the Kaplan-Meier method to account for censoring of vaccination status after age 19 months for children assessed for vaccination status before reaching age 24 or 35 months.
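To illustrate why the Kaplan-Meier method matters here, the sketch below estimates the proportion vaccinated by a given age when some children were assessed before reaching that age. It is a simplified, unweighted illustration: the function name `km_coverage`, the record layout, and the data are hypothetical, ages are in whole months, and the report's actual estimates would also incorporate survey weights.

```python
def km_coverage(records, by_age):
    """Kaplan-Meier estimate of the proportion vaccinated by `by_age` months.

    records: list of (age_months, vaccinated) pairs. If vaccinated is True,
    age_months is the age at vaccination; otherwise it is the age at which
    the child's status was last assessed (a censored observation).
    """
    event_ages = sorted({a for a, v in records if v and a <= by_age})
    unvaccinated = 1.0  # running probability of remaining unvaccinated
    for t in event_ages:
        at_risk = sum(1 for a, _ in records if a >= t)   # still under observation
        events = sum(1 for a, v in records if v and a == t)
        unvaccinated *= 1 - events / at_risk
    return 1 - unvaccinated

# Hypothetical children: two were assessed (unvaccinated) at ages 20 and 30 months.
records = [(12, True), (15, True), (20, False), (22, True), (30, False)]

km = km_coverage(records, by_age=24)
naive = sum(1 for a, v in records if v and a <= 24) / len(records)
print(round(km, 3), round(naive, 3))
```

In this toy data the child assessed at 20 months leaves the risk set before 24 months, so the Kaplan-Meier estimate is higher than the naive proportion that counts that child as unvaccinated at 24 months.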

Our analysis included estimates of vaccination coverage from two to three survey years for the same monthly birth cohort. We treated the estimates from each survey year as independent samples. In a sensitivity analysis reflecting uncertainty about which estimates were closer to the truth – the 2015 or 2016 estimates – we estimated change in vaccination coverage excluding the 2015 data, and excluding the 2016 data. We also repeated the trend analysis restricted to birth cohorts eligible in 2015 or 2016 (including children born January 2012 through May 2015).
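A linear trend across monthly birth cohorts, with the sensitivity analysis that drops one survey year, might be sketched as follows. The report does not state the estimation method, so ordinary least squares is an assumption here; `fit_trend`, the tuple layout, and the data values are hypothetical, and the sketch ignores sampling variance and significance testing.

```python
def fit_trend(estimates, exclude_year=None):
    """OLS slope (percentage points per monthly birth cohort) and intercept.

    estimates: list of (cohort_index, survey_year, coverage_percent) tuples.
    Estimates of the same cohort from different survey years are treated as
    independent points, as the text above describes.
    """
    pts = [(c, cov) for c, yr, cov in estimates if yr != exclude_year]
    if not pts:
        raise ValueError("no estimates left after exclusion")
    n = len(pts)
    x_bar = sum(c for c, _ in pts) / n
    y_bar = sum(cov for _, cov in pts) / n
    sxx = sum((c - x_bar) ** 2 for c, _ in pts)
    if sxx == 0:
        raise ValueError("need at least two distinct birth cohorts")
    sxy = sum((c - x_bar) * (cov - y_bar) for c, cov in pts)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

# Made-up estimates: cohort 2 is measured in both survey years.
data = [(1, 2015, 80.0), (2, 2015, 81.0), (2, 2016, 79.0), (3, 2016, 80.0)]

slope_all, _ = fit_trend(data)
slope_no_2016, _ = fit_trend(data, exclude_year=2016)
slope_no_2015, _ = fit_trend(data, exclude_year=2015)
print(slope_all, slope_no_2016, slope_no_2015)
```

The toy data are built so that a level shift between survey years changes the pooled slope relative to either single-year fit, which is the kind of sensitivity the exclusion analyses are meant to expose.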

Estimated linear trends in vaccination coverage by age 19 months were assessed by monthly birth cohorts from January 2009 through May 2015. Statistically significant trends were found for eight vaccinations; all showed increases in coverage for children born more recently (Table 16, Figures 3-17).

Estimated linear trends in vaccination coverage by age 24 months were assessed by monthly birth cohorts from January 2009 through January 2015. Statistically significant trends were found for five vaccinations; two reflected decreases and three showed increases (Table 17, Figures 18-30). Restricted to 2015 and 2016 data, there were no statistically significant linear trends over cohorts born January 2012 through January 2015.

Estimated linear trends in vaccination coverage by age 35 months were assessed by monthly birth cohorts from January 2009 through February 2014. Statistically significant trends were found for three vaccinations; all reflected increases (Table 18, Figures 31-43). Restricted to 2015 and 2016 data, there were no statistically significant linear trends over cohorts born January 2012 through February 2014.

Limitations

The findings in this report are subject to several limitations. The bridging cohort analysis conducted for this report provided possible signals of a change in survey bias, but did not attempt to measure directly the level of possible change in bias. A key assumption of the bridging birth cohort analysis is that the true vaccination coverage measured among the bridging cohort across the two survey years is the same. However, the bridging cohort is not a fixed population and will comprise different children in different survey years due to migration and death. Statistically significant signals of possible change in survey bias are difficult to interpret – they could be caused by fluctuation due to random sampling or changes in propensity of households called to participate in the survey, unidentified change in survey operations, or unidentified changes in how providers report vaccinations. When there is a signal of possible change in survey bias among the bridging cohort, we do not know if the estimated vaccination coverage for the most recent survey year is closer to or further from the true proportion vaccinated compared to the estimates from the previous year's sample. If there is a change in bias among the bridging cohort, we do not know if it also applies to the entire annual samples from the 2015 and 2016 survey years.

The analysis of components of difference in adjacent annual coverage estimates was limited to the most recent five years of NIS-Child data, during which vaccination coverage was stable for the majority of vaccinations. The limitations of this approach to evaluating change in immunization program performance may differ if evaluated for earlier years of the survey dating back to 1995.

The trend analysis by birth cohort assumed that estimates for a given monthly birth cohort from different survey years were independent estimates, measured with the same systematic bias. The trend results were generally robust in secondary analyses that excluded either the 2015 or 2016 data.

All estimates in this report were calculated at the national level only, other than some of the evaluations conducted to identify possible explanations for the signals of change in survey bias. Validity of survey estimates might vary by sociodemographic characteristics or geographic area. Findings from the evaluation of components of differences in adjacent annual estimates are expected to apply to population subgroups, though it is possible that the distribution of the sample respondents by age at vaccination assessment and monthly birth cohort could differ across groups. Annual NIS-Child sample size by states, selected local areas, and territories is not sufficient to analyze trends by month and year of birth at the sub-national level.

Conclusions

During routine annual data quality checks, we identified possible changes in survey accuracy among children in the bridging birth cohorts included in both the 2015 and 2016 NIS-Child. Investigation of survey operations and participant factors revealed no reasons to explain the observed discrepancies among the bridging cohorts. We found similar drops in coverage among the bridging cohort from 2011 to 2012, when the NIS-Child sample increased the proportion of the sample that came from the cellular telephone sample frame from 11% to 50% [7]. No signals of change in survey accuracy were found for comparisons from 2012 through 2015. We hypothesize that the observed discrepancies from 2015 to 2016 may be due to random fluctuations in characteristics of the survey respondents that we could not measure. We will continue to assess accuracy of NIS-Child estimates each year.

Further investigation identified limitations of the traditional comparison of annual estimates for assessing changes in immunization program performance. These limitations can worsen when there are statistically significant signals of possible change in survey accuracy from one survey year to the next. When we assessed trends in coverage more directly by month and year of birth, we found stable coverage over the births included in the 2015 and 2016 NIS-Child data. We conclude that the observed decreases in coverage from 2015 to 2016 in the traditional annual estimates likely do not provide evidence of decreases in vaccination coverage over time.

Both the traditional comparison of annual estimates and the birth cohort analysis generally indicate that childhood vaccination coverage is high and stable, with improvements needed for some vaccinations. The observed increases or decreases found from either approach were small differences of one to two percentage points.

Our detailed analyses revealed the potential to use birth cohort analysis to assess year-to-year trends in vaccination coverage. This alternative approach may be more informative for the NIS-Child community of users than the annual estimate measure used currently and historically.

APPENDIX: Evaluation of Differences in Vaccination Coverage Estimates from One Survey Year to the Next

To better understand how to interpret the difference in vaccination coverage estimates from one survey year estimate compared to the prior year estimate, we started by expressing an annual NIS-Child estimate as a weighted average of the estimates for each monthly birth cohort included in the survey year. We then used this expression to write the equation for the difference in two adjacent annual estimates. Across the two survey years, we organized monthly birth cohorts into three groups: 1) older children included only in the first survey year (e.g., for 2015, born January 2012 through December 2012); 2) children included in both survey years – the bridging or overlapping cohort (e.g., for 2015 and 2016, born January 2013 through May 2014); and 3) younger children included only in the most recent year (e.g., for 2016, born June 2014 through May 2015). To show how the bridging cohort analysis relates to the difference in annual estimates, we further split each vaccination coverage estimate into two parts with the same denominator – coverage by age 19 months, and coverage during ages 19 months through age at assessment (maximum 35 months). We computed the weighted distribution of each monthly birth cohort from 2015 and from 2016, and the average age at assessment of vaccination status for each monthly birth cohort for each survey year.

We used the equation for the difference of two adjacent annual estimates to break out the overall estimated difference into components. We split the overall difference into contributions from the non-overlapping and overlapping (bridging) cohorts, and further split by age of vaccination (vaccination by age 19 months and vaccination at or after age 19 months). From this analysis, we can see how each component contributes to the overall difference in vaccination coverage estimates. We conducted this analysis for each vaccination outcome for each pair of years compared in the bridging cohort analysis.

Figure 1 shows the monthly birth cohorts represented in the 2015 and 2016 NIS-Child, and the ages at vaccination assessment for each monthly birth cohort. Children born in 2012 are only represented in the 2015 data, while children born June 2014 through May 2015 are only represented in the 2016 data. Children born January 2013 through May 2014 are represented in both survey years (the bridging cohort). Each monthly birth cohort is represented in a survey year for varying numbers of months of vaccination assessment, at different ages at assessment. For example, children born in April 2013 are included in the 2015 data during 13 months, and assessed at ages 21-33 months. This cohort of children is also included in the 2016 data during three months and assessed at ages 33-35 months.

We can express a vaccination coverage estimate from an annual NIS-Child data year as a weighted average of estimates for each monthly birth cohort. The average month and year of birth for the 2016 data was March 2014 (March 2013 for 2015 data) (Figure 2). The average age at vaccination assessment in 2015 and 2016 was 28.5 months.

Taken together, data from the 2015 and 2016 survey years include children born across 41 monthly birth cohorts. Defining the January 2012 cohort as cohort 1, the older children only surveyed in 2015 will be monthly cohorts 1-12, the bridging cohort will be monthly cohorts 13-29, and the younger children surveyed only in 2016 will be monthly cohorts 30-41.
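The cohort numbering above is simple month arithmetic, and a short sketch makes the boundaries easy to check. The function names `cohort_index` and `cohort_group` are hypothetical helpers introduced only for illustration; the index ranges are the ones stated in the text for the 2015 and 2016 survey years.

```python
def cohort_index(year, month, base_year=2012, base_month=1):
    """1-based index of a monthly birth cohort, with January 2012 as cohort 1."""
    return (year - base_year) * 12 + (month - base_month) + 1

def cohort_group(index):
    """Classify a cohort index for the 2015 vs. 2016 comparison."""
    if 1 <= index <= 12:
        return "2015 only"   # older children, born January-December 2012
    if 13 <= index <= 29:
        return "bridging"    # born January 2013 through May 2014
    if 30 <= index <= 41:
        return "2016 only"   # younger children, born June 2014 through May 2015
    raise ValueError("birth cohort not in either survey year")

for year, month in [(2012, 12), (2013, 1), (2014, 5), (2014, 6), (2015, 5)]:
    i = cohort_index(year, month)
    print(year, month, i, cohort_group(i))
```

The loop prints the cohorts on either side of each boundary, confirming 12 older cohorts, 17 bridging cohorts, and 12 younger cohorts, for 41 in total.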

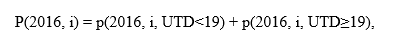

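Now we can break the annual estimates of vaccination coverage from 2015 and 2016, defined as P2015 and P2016, respectively, into sums across the monthly birth cohorts:

$$
P_{2015} = \sum_{i=1}^{29} w(2015, i)\, p(2015, i) \tag{1}
$$

$$
P_{2016} = \sum_{i=13}^{41} w(2016, i)\, p(2016, i) \tag{2}
$$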

where w(2015, i) and w(2016, i) are the proportions of the total sum of the provider weights per survey year falling in monthly birth cohort i, and p(2015, i) and p(2016, i) are weighted estimates for each survey year of the proportions of children up-to-date (UTD) on the vaccination of interest for monthly birth cohort i.
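As a concrete check of this weighted-average form, the sketch below derives the cohort weight shares and cohort-level coverage from child-level records and confirms that their weighted sum equals the ordinary weighted proportion. The function name `cohort_summary`, the record layout, and the weights are hypothetical; real NIS-Child provider weights come from the survey's weighting procedures, which are not reproduced here.

```python
from collections import defaultdict

def cohort_summary(children):
    """Return ({i: w_i}, {i: p_i}) from (cohort, weight, up_to_date) records.

    w_i is cohort i's share of the total weight in the survey year and
    p_i is the weighted proportion up-to-date within cohort i.
    """
    total = sum(wt for _, wt, _ in children)
    weight_sum = defaultdict(float)
    utd_sum = defaultdict(float)
    for cohort, wt, is_utd in children:
        weight_sum[cohort] += wt
        if is_utd:
            utd_sum[cohort] += wt
    w = {i: weight_sum[i] / total for i in weight_sum}
    p = {i: utd_sum[i] / weight_sum[i] for i in weight_sum}
    return w, p

# Made-up records for two monthly birth cohorts in one survey year.
children = [(13, 100.0, True), (13, 100.0, False),
            (14, 200.0, True), (14, 100.0, True)]

w, p = cohort_summary(children)
annual = sum(w[i] * p[i] for i in w)  # weighted average over cohorts
direct = sum(wt for _, wt, utd in children if utd) / sum(wt for _, wt, _ in children)
print(round(annual, 3), round(direct, 3))
```

Both numbers agree because the cohort shares sum to one, so the annual estimate is simply the cohort estimates re-assembled with their weight shares.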

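Now, using equations 1 and 2, the difference between the 2016 and 2015 coverage estimates is:

$$
P_{2016} - P_{2015} = \left[ \sum_{i=30}^{41} w(2016, i)\, p(2016, i) - \sum_{i=1}^{12} w(2015, i)\, p(2015, i) \right] + \sum_{i=13}^{29} \left[ w(2016, i)\, p(2016, i) - w(2015, i)\, p(2015, i) \right] \tag{3}
$$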

The overall difference can be split into two parts, the first comparing the non-overlapping birth cohorts of younger children from 2016 (monthly birth cohorts i = 30 to 41) with the older children from 2015 (i = 1 to 12), and the second among the bridging cohort born in between the younger and older cohorts (i = 13 to 29). The non-overlapping birth cohorts were born 18 to 40 months apart (January – December 2012 from 2015 data vs. June 2014 – May 2015 from 2016 data). About 62% of the combined 2015 and 2016 survey weights is included in the bridging cohort part of the overall difference in annual estimates (Figure 2).

The weighted distribution of an annual sample by monthly birth cohort under-represents the oldest and youngest children (Figure 2). This occurs because monthly birth cohorts are eligible for varying numbers of months in the sample, and the NIS-Child survey weights are calibrated to the estimated population of children aged 19-35 months at mid-year of data collection, and not to the population in each monthly birth cohort. When estimates from the 2015 and 2016 data years are compared, the older of the children included only in the 2015 survey are under-represented, and the younger of the children included only in the 2016 survey are under-represented. Among the bridging cohort, the 2015 survey weights the older children more, while the 2016 survey weights the younger children more (Figure 2). Thus, if there are trends in vaccination coverage by monthly birth cohort, the difference of two adjacent annual NIS-Child estimates may provide a biased summary measure of change compared to an estimate for which monthly birth cohorts from each survey year are correctly weighted to their population distribution.

The varying ages at vaccination assessment across monthly birth cohorts could also lead to biased estimation of change compared to an estimate that assesses all children at the same milestone age. From equation 3 and Figure 2, the part of the difference between overall 2016 and 2015 estimates for the non-overlapping birth cohorts compares the younger children from 2016, assessed at average ages 19-25 months, with the older children from 2015, assessed at average ages 30-35 months. Thus, if there were no change in vaccination coverage between these cohorts of children, and vaccinations were received during ages 19-35 months, there could be a negative contribution from the non-overlapping birth cohort part of the overall difference. This would not be an issue for the birth dose of HepB or rotavirus vaccination, which are not administered after 19 months, and more of an issue for vaccinations with higher uptake during ages 19-35 months (e.g., ≥2 HepA doses).

Within the bridging cohort, the children from the 2016 data are assessed on average six to ten months later than children from the 2015 data (Figure 2). Thus, this part of the overall difference in adjacent annual estimates measures "catch-up" vaccination during ages 19-35 months. Although the same birth cohorts of children are represented in the bridging cohort from the 2015 and 2016 surveys, they are from independent random samples, and baseline coverage by age 19 months from 2015 and 2016 could differ by chance or for systematic reasons. Thus, the estimate of "catch-up" vaccination among the bridging cohort may be biased compared to an estimate that followed the same children over time. If the baseline vaccination coverage among the bridging birth cohort is similar for 2015 and 2016, we would expect a positive contribution to the overall difference in adjacent annual estimates.

To relate equation 3 for the difference in two adjacent annual estimates with the bridging cohort analysis described in the previous section, we can further break out the non-overlapping and bridging cohort parts by age at vaccination.

Here, the vaccination coverage estimate for monthly birth cohort i from the 2016 data year, p(2016, i), is defined as the sum of the coverage based on vaccinations received before reaching age 19 months and the coverage based on vaccinations received at or after age 19 months.

Using similar definitions for the 2015 data, we can break out the overall difference in 2016 and 2015 coverage estimates into four parts:

The first two parts are differences between 2015 and 2016 estimates for the non-overlapping cohorts, for coverage by age 19 months and at or after 19 months, respectively. The last two parts are differences among the bridging cohort, for coverage by age 19 months and at or after 19 months, respectively.
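One way to write such a four-part decomposition is sketched below. The notation is an assumption made for illustration and may differ from the report's own equations: w(y, i) denotes the share of the survey year y weights belonging to monthly birth cohort i, B is the set of bridging cohorts, N_2015 and N_2016 are the cohorts included only in the 2015 or only in the 2016 survey, and p_{<19} and p_{≥19} are the two components of p(y, i) described above.

```latex
\begin{aligned}
\hat{P}_{2016} - \hat{P}_{2015}
  &= \Big[\sum_{i \in N_{2016}} w(2016,i)\,p_{<19}(2016,i)
        - \sum_{i \in N_{2015}} w(2015,i)\,p_{<19}(2015,i)\Big] \\
  &\quad + \Big[\sum_{i \in N_{2016}} w(2016,i)\,p_{\ge 19}(2016,i)
        - \sum_{i \in N_{2015}} w(2015,i)\,p_{\ge 19}(2015,i)\Big] \\
  &\quad + \Big[\sum_{i \in B} w(2016,i)\,p_{<19}(2016,i)
        - \sum_{i \in B} w(2015,i)\,p_{<19}(2015,i)\Big] \\
  &\quad + \Big[\sum_{i \in B} w(2016,i)\,p_{\ge 19}(2016,i)
        - \sum_{i \in B} w(2015,i)\,p_{\ge 19}(2015,i)\Big],
\qquad p(y,i) = p_{<19}(y,i) + p_{\ge 19}(y,i).
\end{aligned}
```

The four bracketed terms correspond, in order, to the non-overlapping cohorts by age 19 months, the non-overlapping cohorts at or after 19 months, the bridging cohort by age 19 months, and the bridging cohort at or after 19 months.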

The first part for the bridging cohort does not measure change in vaccination coverage; it compares estimates of coverage by age 19 months in the same birth cohorts measured independently in two different survey years. If there are no systematic changes in the survey, and the true vaccination coverage did not change among the actual populations eligible for the 2015 and 2016 surveys born in the range of the bridging cohort, we would expect this part of the overall difference to be zero. However, the weights by monthly birth cohort are skewed in opposite directions, toward the younger cohorts for 2016 and the older cohorts for 2015. Thus, this part would provide a biased estimate of the bias of the survey, compared to an estimate that correctly weighted each monthly birth cohort to the actual population counts. As a further consequence of this skewed weighting, if estimated coverage was increasing or decreasing across the monthly birth cohorts within the bridging cohort, that trend would be reflected in this part of the overall difference.

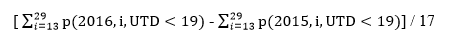

In the bridging cohort analysis, we used the difference between the 2016 and 2015 estimates of coverage by age 19 months among the bridging cohort as the signal of possible change in bias of the survey from 2015 to 2016. This is the same as the first part of the bridging cohort component of the overall difference, under the assumption that all monthly birth cohorts are weighted equally within and across the two survey years.
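The following sketch shows how an overall difference between two adjacent survey years splits into four such parts, and how skewed cohort weights can make the bridging-cohort part for coverage by age 19 months non-zero even when each cohort's coverage is identical in both surveys. All numbers are invented for illustration and are not NIS-Child estimates. The names `CohortEstimate`, `decompose`, and `equal_weight_signal` are hypothetical, and weights are expressed as shares of each survey year's total weight.

```python
from typing import Dict, NamedTuple


class CohortEstimate(NamedTuple):
    weight: float     # share of the survey year's total weight (invented)
    before_19: float  # coverage from vaccinations received before age 19 months
    after_19: float   # added coverage from vaccinations at or after age 19 months


Survey = Dict[int, CohortEstimate]  # keyed by a monthly birth cohort index


def overall(survey: Survey) -> float:
    """Weighted overall coverage estimate for one survey year."""
    return sum(c.weight * (c.before_19 + c.after_19) for c in survey.values())


def weighted(survey: Survey, cohorts, field: str) -> float:
    """Weighted sum of one coverage component over a set of cohorts."""
    return sum(survey[i].weight * getattr(survey[i], field) for i in cohorts)


def decompose(prior: Survey, current: Survey) -> Dict[str, float]:
    """Split overall(current) - overall(prior) into four parts."""
    bridging = prior.keys() & current.keys()
    only_prior = prior.keys() - bridging
    only_current = current.keys() - bridging
    parts = {}
    for field in ("before_19", "after_19"):
        parts["nonoverlap_" + field] = (
            weighted(current, only_current, field) - weighted(prior, only_prior, field)
        )
        parts["bridging_" + field] = (
            weighted(current, bridging, field) - weighted(prior, bridging, field)
        )
    return parts


def equal_weight_signal(prior: Survey, current: Survey) -> float:
    """Mean difference in coverage by 19 months over bridging cohorts, equally weighted."""
    bridging = prior.keys() & current.keys()
    return sum(current[i].before_19 - prior[i].before_19 for i in bridging) / len(bridging)


# Invented example: cohorts 3 and 4 bridge the two years; coverage by 19 months
# is the same in both surveys but rises from cohort 3 to cohort 4.
prior = {
    1: CohortEstimate(0.25, 0.78, 0.10),
    2: CohortEstimate(0.25, 0.79, 0.09),
    3: CohortEstimate(0.30, 0.80, 0.06),  # older bridging cohort, weighted more in prior year
    4: CohortEstimate(0.20, 0.84, 0.02),
}
current = {
    3: CohortEstimate(0.20, 0.80, 0.10),
    4: CohortEstimate(0.30, 0.84, 0.08),  # younger bridging cohort, weighted more in current year
    5: CohortEstimate(0.25, 0.85, 0.03),
    6: CohortEstimate(0.25, 0.86, 0.01),
}

parts = decompose(prior, current)
print(parts)
print(overall(current) - overall(prior), sum(parts.values()))
print(equal_weight_signal(prior, current))
```

With these invented inputs, the equally weighted signal is zero because each bridging cohort has the same coverage by age 19 months in both years, while the weighted bridging part is slightly positive purely because the weights shift toward the younger cohort with higher coverage.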

With the conceptual framework for understanding the difference in two adjacent annual estimates established, we calculated the overall difference between 2015 and 2016 estimates of vaccination coverage and broke this overall difference into the four components from equation 4 (Table 11). Similar estimates were calculated for comparisons between adjacent survey years from 2011 through 2015 (Tables 12-15).

Overall, there were six vaccinations with statistically significant differences from 2015 to 2016, all with lower estimates in 2016 compared to 2015. The statistically significant decreases ranged from 1.3 percentage points for ≥3 DTaP doses to 2.3 percentage points for ≥4 PCV doses (Table 11). For each of these vaccinations, there was also a statistically significant decrease from 2015 to 2016 among the bridging cohort for coverage by age 19 months. There were three other vaccinations with statistically significant decreases in coverage by age 19 months among the bridging cohort, which were offset by larger increases in coverage during 19-35 months among the bridging cohort: ≥4 DTaP doses, the full series of Haemophilus influenzae type b vaccine (Hib), and the combined seven-vaccine series (children who received ≥4 DTaP doses, ≥3 doses of poliovirus vaccine, ≥1 dose of measles-containing vaccine, the full Hib series, ≥3 HepB doses, ≥1 dose of varicella vaccine, and ≥4 PCV doses).

Among the bridging cohort, coverage during 19-35 months was statistically significantly higher in 2016 compared to 2015 for all vaccinations except rotavirus and the HepB birth dose. The largest differences were for ≥2 HepA doses (17.5 percentage points) and ≥4 DTaP doses (6.7 percentage points). This pattern was generally observed for the other comparisons of estimates from adjacent years (Tables 12-15). The contribution of the bridging cohort to the overall differences tended to be positive, except when there was a statistically significant decrease in coverage by age 19 months. For the HepB birth dose and rotavirus vaccination, the contribution from the bridging cohort could be positive or negative, primarily reflecting the difference in coverage by age 19 months. As expected, the overall differences were closer to the differences for the bridging cohort compared to the non-overlapping cohort.

For the non-overlapping cohorts, coverage during 19-35 months was statistically significantly lower for the younger cohorts from 2016 compared to the older cohorts from 2015, except for rotavirus vaccination and the HepB birth dose. The largest differences were for ≥2 HepA doses (27.2 percentage points) and ≥4 DTaP doses (11.5 percentage points). The contributions of the non-overlapping cohort to the overall differences from 2015 to 2016 were negative for all vaccinations except rotavirus. In some of the other adjacent-year comparisons, there was a positive contribution for the HepB birth dose, including a statistically significant increase from 2011 to 2012. These patterns were also generally observed for other comparisons from adjacent years (Tables 12-15).

From 2011 to 2012, coverage with the HepB birth dose increased by 3.0 percentage points due to an increase in the non-overlapping cohort (Table 12). Coverage decreased for six vaccinations overall, with decreases ranging from 1.1 percentage points for poliovirus vaccination and ≥3 DTaP doses to 2.5 percentage points for ≥4 PCV doses. The contribution of coverage by age 19 months among the bridging cohort was negative for all six of these vaccinations, and the differences were statistically significant for three.

There were few statistically significant differences in overall vaccination coverage comparing estimates from 2012 to 2013, 2013 to 2014, and 2014 to 2015 (Tables 13-15). Exceptions included increases from 2012 to 2013 of 2.6 percentage points for the HepB birth dose and of 4.0 percentage points for rotavirus vaccination (Table 13), and increases from 2013 to 2014 of 2.0 and 2.9 percentage points for ≥1 and ≥2 HepA doses, respectively (Table 14).

Authors

James A. Singleton, PhD, David Yankey, MS, Holly A. Hill, MD, PhD, Zhen Zhao, PhD, Laurie Elam-Evans, PhD, Immunization Services Division, National Center for Immunization and Respiratory Diseases, Centers for Disease Control and Prevention

Benjamin Fredua, MS, Leidos

Kirk Wolter, PhD, Benjamin Skalland, MS, NORC at the University of Chicago

The authors would like to acknowledge Mary Ann K. Hall, MPH, Cherokee Nation Assurance, and Stacie Greby, DVM, MPH, Immunization Services Division, National Center for Immunization and Respiratory Diseases, Centers for Disease Control and Prevention, for their assistance in preparing this report.

- Yankey D, Hill HA, Elam-Evans LD, Khare M, Singleton JA, Pineau V, Wolter K. Estimating change in telephone survey bias in an era of declining response rates and transition to wireless telephones – evidence from the National Immunization Survey (NIS), 1995-2013. Presented at the American Association for Public Opinion Research (AAPOR) 70th Annual Conference, May 15, 2015, Hollywood, CA.

- Smith PJ, Nuorti JP, Singleton JA, Zhao Z, Wolter KM. Effect of vaccine shortages on timeliness of pneumococcal conjugate vaccination: results from the 2001–2005 National Immunization Survey. Pediatrics 2007;120:e1165-e1173.

- Nuorti JP, Martin SW, Smith PJ, Moran JS, Schwartz B. Uptake of pneumococcal conjugate vaccine among children in the 1998–2002 United States birth cohorts. Am J Prev Med 2008;34:46-53.

- Smith PJ, Stevenson J. Racial/ethnic disparities in vaccination coverage by 19 months of age: an evaluation of the impact of missing data resulting from record scattering. Stat Med 2008;27:4107-4118.

- Smith PJ, Jain N, Stevenson J, Männikkö N, Molinari NA. Progress in timely vaccination coverage among children living in low-income households. Arch Pediatr Adolesc Med 2009;163:462-468.

- Zhao Z, Murphy TV, Jacques-Carroll L. Progress in newborn hepatitis B vaccination by birth year cohorts—1998–2007, USA. Vaccine 2011;30:14-20.

- Black CL, Yankey D, Kolasa M. National, state and local area vaccination coverage among children aged 19-35 months – United States, 2012. MMWR 2013;62:733-740.