Framework for Program Evaluation in Public Health

FOREWORD

Health improvement is what public health professionals strive to achieve. To reach this goal, we must devote our skill -- and our will -- to evaluating the effects of public health actions. As the targets of public health actions have expanded beyond infectious diseases to include chronic diseases, violence, emerging pathogens, threats of bioterrorism, and the social contexts that influence health disparities, the task of evaluation has become more complex. CDC developed the framework for program evaluation to ensure that amidst the complex transition in public health, we will remain accountable and committed to achieving measurable health outcomes.

By integrating the principles of this framework into all CDC program operations, we will stimulate innovation toward outcome improvement and be better positioned to detect program effects. More efficient and timely detection of these effects will enhance our ability to translate findings into practice.

Guided by the steps and standards in the framework, our basic approach to program planning will also evolve. Findings from prevention research will lead to program plans that are clearer and more logical; stronger partnerships will allow collaborators to focus on achieving common goals; integrated information systems will support more systematic measurement; and lessons learned from evaluations will be used more effectively to guide changes in public health strategies.

Publication of this framework also emphasizes CDC's continuing commitment to improving overall community health. Because categorical strategies cannot succeed in isolation, public health professionals working across program areas must collaborate in evaluating their combined influence on health in the community. Only then will we be able to realize and demonstrate the success of our vision -- healthy people in a healthy world through prevention.

Jeffrey P. Koplan, M.D., M.P.H. The following CDC staff members prepared this report: Robert L. Milstein, M.P.H. Scott F. Wetterhall, M.D., M.P.H. in collaboration with CDC Evaluation Working Group Members Gregory M. Christenson, Ph.D. Jeffrey R. Harris, M.D. Nancy F. Pegg, M.B.A. Janet L. Collins, Ph.D., M.S. Diane O. Dunet, M.P.A. Aliki A. Pappas, M.P.H., M.S.W. Alison E. Kelly, M.P.I.A. Paul J. Placek, Ph.D. Michael Hennessy, Ph.D., M.P.H. Deborah L. Rugg, Ph.D. April J. Bell, M.P.H. Thomas A. Bartenfeld, III, Ph.D. Roger H. Bernier, Ph.D., M.P.H. Max R. Lum, Ed.D. Galen E. Cole, Ph.D., M.P.H. Kathy Cahill, M.P.H. Hope S. King, M.S. Eunice R. Rosner, Ed.D., M.S. William Kassler, M.D. Joyce J. Neal, Ph.D., M.P.H. Additional CDC Contributors Office of the Director: Lynda S. Doll, Ph.D., M.A.; Charles W. Gollmar; Richard A. Goodman, M.D., M.P.H.; Wilma G. Johnson, M.S.P.H.; Marguerite Pappaioanou, D.V.M., Ph.D., M.P.V.M.; David J. Sencer, M.D., M.P.H. (Retired); Dixie E. Snider, M.D., M.P.H.; Marjorie A. Speers, Ph.D.; Lisa R. Tylor; and Kelly O’Brien Yehl, M.P.A. (Washington, D.C.). Agency for Toxic Substances and Disease Registry: Peter J. McCumiskey and Tim L. Tinker, Dr.P.H., M.P.H. Epidemiology Program Office: Jeanne L. Alongi, M.P.H. (Public Health Prevention Service); Peter A. Briss, M.D.; Andrew L. Dannenberg, M.D., M.P.H.; Daniel B. Fishbein, M.D.; Dennis F. Jarvis, M.P.H.; Mark L. Messonnier, Ph.D., M.S; Bradford A. Myers; Raul A. Romaguera, D.M.D., M.P.H.; Steven B. Thacker, M.D., M.Sc.; Benedict I. Truman, M.D., M.P.H.; Katherine R. Turner, M.P.H. (Public Health Prevention Service); Jennifer L. Wiley, M.H.S.E. (Public Health Prevention Service); G. David Williamson, Ph.D.; and Stephanie Zaza, M.D., M.P.H. National Center for Chronic Disease Prevention and Health Promotion: Cynthia M. Jorgensen, Dr.P.H.; Marshall W. Kreuter, Ph.D., M.P.H.; R. Brick Lancaster, M.A.; Imani Ma’at, Ed.D., Ed.M., M.C.P.; Elizabeth Majestic, M.S., M.P.H.; David V. 
McQueen, Sc.D., M.A.; Diane M. Narkunas, M.P.H.; Dearell R. Niemeyer, M.P.H.; and Lori B. de Ravello, M.P.H. National Center for Environmental Health: Jami L. Fraze, M.S.Ed.; Joan L. Morrissey; William C. Parra, M.S.; Judith R. Qualters, Ph.D.; Michael J. Sage, M.P.H.; Joseph B. Smith; and Ronald R. Stoddard. National Center for Health Statistics: Marjorie S. Greenberg, M.A. and Jennifer H. Madans, Ph.D. National Center for HIV, STD, and TB Prevention: Huey-Tsyh Chen, Ph.D.; Janet C. Cleveland, M.S.; Holly J. Dixon; Janice P. Hiland, M.A.; Richard A. Jenkins, Ph.D.; Jill K. Leslie; Mark N. Lobato, M.D.; Kathleen M. MacQueen, Ph.D., M.P.H.; and Noreen L. Qualls, Dr.P.H., M.S.P.H. National Center for Injury Prevention and Control: Christine M. Branche, Ph.D.; Linda L. Dahlberg, Ph.D.; and David A. Sleet, Ph.D., M.A. National Immunization Program: Susan Y. Chu, Ph.D., M.S.P.H. and Lance E. Rodewald, M.D. National Institute for Occupational Safety and Health: Linda M. Goldenhar, Ph.D. and Travis Kubale, M.S.W. Public Health Practice Program Office: Patricia Drehobl, M.P.H.; Michael T. Hatcher, M.P.H.; Cheryl L. Scott, M.D., M.P.H.; Catherine B. Shoemaker, M.Ed.; Brian K. Siegmund, M.S.Ed., M.S.; and Pomeroy Sinnock, Ph.D.

Consultants and Contributors

Framework for Program Evaluation in Public Health

Summary

Effective program evaluation is a systematic way to improve and account for public health actions by involving procedures that are useful, feasible, ethical, and accurate. The framework guides public health professionals in their use of program evaluation. It is a practical, nonprescriptive tool, designed to summarize and organize essential elements of program evaluation. The framework comprises steps in program evaluation practice and standards for effective program evaluation. Adhering to the steps and standards of this framework will allow an understanding of each program's context and will improve how program evaluations are conceived and conducted.
Furthermore, the framework encourages an approach to evaluation that is integrated with routine program operations. The emphasis is on practical, ongoing evaluation strategies that involve all program stakeholders, not just evaluation experts. Understanding and applying the elements of this framework can be a driving force for planning effective public health strategies, improving existing programs, and demonstrating the results of resource investments.

INTRODUCTION

Program evaluation is an essential organizational practice in public health (1); however, it is not practiced consistently across program areas, nor is it sufficiently well-integrated into the day-to-day management of most programs. Program evaluation is also necessary for fulfilling CDC's operating principles for guiding public health activities, which include a) using science as a basis for decision-making and public health action; b) expanding the quest for social equity through public health action; c) performing effectively as a service agency; d) making efforts outcome-oriented; and e) being accountable (2). These operating principles imply several ways to improve how public health activities are planned and managed. They underscore the need for programs to develop clear plans, inclusive partnerships, and feedback systems that allow learning and ongoing improvement to occur. One way to ensure that new and existing programs honor these principles is for each program to conduct routine, practical evaluations that provide information for management and improve program effectiveness.

This report presents a framework for understanding program evaluation and facilitating integration of evaluation throughout the public health system. The purposes of this report are to

BACKGROUND

Evaluation has been defined as systematic investigation of the merit, worth, or significance of an object (3,4). During the past three decades, the practice of evaluation has evolved as a discipline with new definitions, methods, approaches, and applications to diverse subjects and settings (4-7). Despite these refinements, a basic organizational framework for program evaluation in public health practice had not been developed. In May 1997, the CDC Director and executive staff recognized the need for such a framework and the need to combine evaluation with program management. Further, the need for evaluation studies that demonstrate the relationship between program activities and prevention effectiveness was emphasized. CDC convened an Evaluation Working Group, charged with developing a framework that summarizes and organizes the basic elements of program evaluation.

Procedures for Developing the Framework

The Evaluation Working Group, with representatives from throughout CDC and in collaboration with state and local health officials, sought input from eight reference groups during its year-long information-gathering phase. Contributors included

In February 1998, the Working Group sponsored the Workshop To Develop a Framework for Evaluation in Public Health Practice. Approximately 90 representatives participated. In addition, the working group conducted interviews with approximately 250 persons, reviewed published and unpublished evaluation reports, consulted with stakeholders of various programs to apply the framework, and maintained a website to disseminate documents and receive comments. In October 1998, a national distance-learning course featuring the framework was also conducted through CDC's Public Health Training Network (8). The audience included approximately 10,000 professionals. These information-sharing strategies provided the working group numerous opportunities for testing and refining the framework with public health practitioners.

Defining Key Concepts

Throughout this report, the term program is used to describe the object of evaluation, which could be any organized public health action. This definition is deliberately broad because the framework can be applied to almost any organized public health activity, including direct service interventions, community mobilization efforts, research initiatives, surveillance systems, policy development activities, outbreak investigations, laboratory diagnostics, communication campaigns, infrastructure-building projects, training and educational services, and administrative systems. The additional terms defined in this report were chosen to establish a common evaluation vocabulary for public health professionals.

Integrating Evaluation with Routine Program Practice

Evaluation can be tied to routine program operations when the emphasis is on practical, ongoing evaluation that involves all program staff and stakeholders, not just evaluation experts. The practice of evaluation complements program management by gathering necessary information for improving and accounting for program effectiveness.
Public health professionals routinely have used evaluation processes when answering questions from concerned persons, consulting partners, making judgments based on feedback, and refining program operations (9). These evaluation processes, though informal, are adequate for ongoing program assessment to guide small changes in program functions and objectives. However, when the stakes of potential decisions or program changes increase (e.g., when deciding what services to offer in a national health promotion program), employing evaluation procedures that are explicit, formal, and justifiable becomes important (10).

ASSIGNING VALUE TO PROGRAM ACTIVITIES

Questions regarding values, in contrast with those regarding facts, generally involve three interrelated issues: merit (i.e., quality), worth (i.e., cost-effectiveness), and significance (i.e., importance) (3). If a program is judged to be of merit, other questions might arise regarding whether the program is worth its cost. Also, questions can arise regarding whether even valuable programs contribute important differences. Assigning value and making judgments regarding a program on the basis of evidence requires answering the following questions (3,4,11):

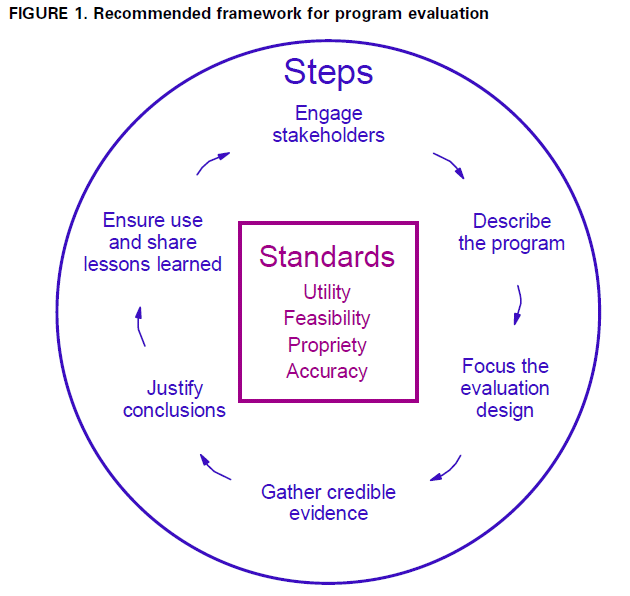

These questions should be addressed at the beginning of a program and revisited throughout its implementation. The framework described in this report provides a systematic approach for answering these questions.

FRAMEWORK FOR PROGRAM EVALUATION IN PUBLIC HEALTH

Effective program evaluation is a systematic way to improve and account for public health actions by involving procedures that are useful, feasible, ethical, and accurate. The recommended framework was developed to guide public health professionals in using program evaluation. It is a practical, nonprescriptive tool, designed to summarize and organize the essential elements of program evaluation. The framework comprises steps in evaluation practice and standards for effective evaluation (Figure 1).

The framework is composed of six steps that must be taken in any evaluation. They are starting points for tailoring an evaluation to a particular public health effort at a particular time. Because the steps are all interdependent, they might be encountered in a nonlinear sequence; however, an order exists for fulfilling each -- earlier steps provide the foundation for subsequent progress. Thus, decisions regarding how to execute a step are iterative and should not be finalized until previous steps have been thoroughly addressed. The steps are as follows: Step 1: Engage stakeholders. Step 2: Describe the program. Step 3: Focus the evaluation design. Step 4: Gather credible evidence. Step 5: Justify conclusions. Step 6: Ensure use and share lessons learned.

Adhering to these six steps will facilitate an understanding of a program's context (e.g., the program's history, setting, and organization) and will improve how most evaluations are conceived and conducted.

The second element of the framework is a set of 30 standards for assessing the quality of evaluation activities, organized into the following four groups: Standard 1: utility, Standard 2: feasibility, Standard 3: propriety, and Standard 4: accuracy. These standards, adopted from the Joint Committee on Standards for Educational Evaluation (12),* answer the question, "Will this evaluation be effective?" and are recommended as criteria for judging the quality of program evaluation efforts in public health.
The remainder of this report discusses each step, its subpoints, and the standards that govern effective program evaluation (Box 1).

Steps in Program Evaluation

Step 1: Engaging Stakeholders

The evaluation cycle begins by engaging stakeholders (i.e., the persons or organizations having an investment in what will be learned from an evaluation and what will be done with the knowledge). Public health work involves partnerships; therefore, any assessment of a public health program requires considering the value systems of the partners. Stakeholders must be engaged in the inquiry to ensure that their perspectives are understood. When stakeholders are not engaged, an evaluation might not address important elements of a program's objectives, operations, and outcomes. Therefore, evaluation findings might be ignored, criticized, or resisted because the evaluation did not address the stakeholders' concerns or values (12). After becoming involved, stakeholders help to execute the other steps. Identifying and engaging the following three principal groups of stakeholders are critical:

Those Involved in Program Operations. Persons or organizations involved in program operations have a stake in how evaluation activities are conducted because the program might be altered as a result of what is learned. Although staff, funding officials, and partners work together on a program, they are not necessarily a single interest group. Subgroups might hold different perspectives and follow alternative agendas; furthermore, because these stakeholders have a professional role in the program, they might perceive program evaluation as an effort to judge them personally. Program evaluation is related to but must be distinguished from personnel evaluation, which operates under different standards (13).

Those Served or Affected by the Program. Persons or organizations affected by the program, either directly (e.g., by receiving services) or indirectly (e.g., by benefitting from enhanced community assets), should be identified and engaged in the evaluation to the extent possible. Although engaging supporters of a program is natural, individuals who are openly skeptical or antagonistic toward the program also might be important stakeholders to engage. Opposition to a program might stem from differing values regarding what change is needed or how to achieve it. Opening an evaluation to opposing perspectives and enlisting the help of program opponents in the inquiry might be prudent because these efforts can strengthen the evaluation's credibility.

Primary Users of the Evaluation. Primary users of the evaluation are the specific persons who are in a position to do or decide something regarding the program. In practice, primary users will be a subset of all stakeholders identified. A successful evaluation will designate primary users early in its development and maintain frequent interaction with them so that the evaluation addresses their values and satisfies their unique information needs (7).
The scope and level of stakeholder involvement will vary for each program evaluation. Various activities reflect the requirement to engage stakeholders (Box 2) (14). For example, stakeholders can be directly involved in designing and conducting the evaluation. Also, they can be kept informed regarding progress of the evaluation through periodic meetings, reports, and other means of communication. Sharing power and resolving conflicts help avoid overemphasis of values held by any specific stakeholder (15). Occasionally, stakeholders might be inclined to use their involvement in an evaluation to sabotage, distort, or discredit the program. Trust among stakeholders is essential; therefore, caution is required for preventing misuse of the evaluation process.

Step 2: Describing the Program

Program descriptions convey the mission and objectives of the program being evaluated. Descriptions should be sufficiently detailed to ensure understanding of program goals and strategies. The description should discuss the program's capacity to effect change, its stage of development, and how it fits into the larger organization and community. Program descriptions set the frame of reference for all subsequent decisions in an evaluation. The description enables comparisons with similar programs and facilitates attempts to connect program components to their effects (12). Moreover, stakeholders might have differing ideas regarding program goals and purposes. Evaluations done without agreement on the program definition are likely to be of limited use. Sometimes, negotiating with stakeholders to formulate a clear and logical description will bring benefits before data are available to evaluate program effectiveness (7). Aspects to include in a program description are need, expected effects, activities, resources, stage of development, context, and logic model.

Need.
A statement of need describes the problem or opportunity that the program addresses and implies how the program will respond. Important features for describing a program's need include a) the nature and magnitude of the problem or opportunity, b) which populations are affected, c) whether the need is changing, and d) in what manner the need is changing.

Expected Effects. Descriptions of expectations convey what the program must accomplish to be considered successful (i.e., program effects). For most programs, the effects unfold over time; therefore, the descriptions of expectations should be organized by time, ranging from specific (i.e., immediate) to broad (i.e., long-term) consequences. A program's mission, goals, and objectives all represent varying levels of specificity regarding a program's expectations. Also, forethought should be given to anticipate potential unintended consequences of the program.

Activities. Describing program activities (i.e., what the program does to effect change) permits specific steps, strategies, or actions to be arrayed in logical sequence. This demonstrates how each program activity relates to another and clarifies the program's hypothesized mechanism or theory of change (16,17). Also, program activity descriptions should distinguish the activities that are the direct responsibility of the program from those that are conducted by related programs or partners (18). External factors that might affect the program's success (e.g., secular trends in the community) should also be noted.

Resources. Resources include the time, talent, technology, equipment, information, money, and other assets available to conduct program activities. Program resource descriptions should convey the amount and intensity of program services and highlight situations where a mismatch exists between desired activities and resources available to execute those activities.
In addition, economic evaluations require an understanding of all direct and indirect program inputs and costs (19-21).

Stage of Development. Public health programs mature and change over time; therefore, a program's stage of development reflects its maturity. Programs that have recently received initial authorization and funding will differ from those that have been operating continuously for a decade. The changing maturity of program practice should be considered during the evaluation process (22). A minimum of three stages of development must be recognized: planning, implementation, and effects. During planning, program activities are untested, and the goal of evaluation is to refine plans. During implementation, program activities are being field-tested and modified; the goal of evaluation is to characterize real, as opposed to ideal, program activities and to improve operations, perhaps by revising plans. During the last stage, enough time has passed for the program's effects to emerge; the goal of evaluation is to identify and account for both intended and unintended effects.

Context. Descriptions of the program's context should include the setting and environmental influences (e.g., history, geography, politics, social and economic conditions, and efforts of related or competing organizations) within which the program operates (6). Understanding these environmental influences is required to design a context-sensitive evaluation and aid users in interpreting findings accurately and assessing the generalizability of the findings.

Logic Model. A logic model describes the sequence of events for bringing about change by synthesizing the main program elements into a picture of how the program is supposed to work (23-35). Often, this model is displayed in a flow chart, map, or table to portray the sequence of steps leading to program results (Figure 2).
One of the virtues of a logic model is its ability to summarize the program's overall mechanism of change by linking processes (e.g., laboratory diagnosis of disease) to eventual effects (e.g., reduced tuberculosis incidence). The logic model can also display the infrastructure needed to support program operations. Elements that are connected within a logic model might vary but generally include inputs (e.g., trained staff), activities (e.g., identification of cases), outputs (e.g., persons completing treatment), and results ranging from immediate (e.g., curing affected persons) to intermediate (e.g., reduction in tuberculosis rate) to long-term effects (e.g., improvement of population health status). Creating a logic model allows stakeholders to clarify the program's strategies; therefore, the logic model improves and focuses program direction. It also reveals assumptions concerning conditions for program effectiveness and provides a frame of reference for one or more evaluations of the program. A detailed logic model can also strengthen claims of causality and be a basis for estimating the program's effect on endpoints that are not directly measured but are linked in a causal chain supported by prior research (35). Families of logic models can be created to display a program at different levels of detail, from different perspectives, or for different audiences. Program descriptions will vary for each evaluation, and various activities reflect the requirement to describe the program (e.g., using multiple sources of information to construct a well-rounded description) (Box 3). The accuracy of a program description can be confirmed by consulting with diverse stakeholders, and reported descriptions of program practice can be checked against direct observation of activities in the field. 
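
The logic model elements described above (inputs, activities, outputs, and results ranging from immediate to long-term) can be written down as a small data structure. The following is a minimal sketch, not part of the framework itself: the class name `LogicModel`, its field names, and the `missing_links` helper are hypothetical, and the example entries are the tuberculosis examples given in the text.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class LogicModel:
    """Hypothetical container for the logic model elements named in the text."""
    inputs: List[str] = field(default_factory=list)
    activities: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    immediate_results: List[str] = field(default_factory=list)
    intermediate_results: List[str] = field(default_factory=list)
    long_term_results: List[str] = field(default_factory=list)

    def chain(self) -> List[Tuple[str, List[str]]]:
        """Return the elements in the order the sequence of change is read."""
        return [
            ("inputs", self.inputs),
            ("activities", self.activities),
            ("outputs", self.outputs),
            ("immediate_results", self.immediate_results),
            ("intermediate_results", self.intermediate_results),
            ("long_term_results", self.long_term_results),
        ]

    def missing_links(self) -> List[str]:
        """Names of elements with no entries, i.e., gaps in the described chain."""
        return [name for name, items in self.chain() if not items]


# Entries taken from the tuberculosis examples in the text.
tb_model = LogicModel(
    inputs=["trained staff"],
    activities=["identification of cases"],
    outputs=["persons completing treatment"],
    immediate_results=["curing affected persons"],
    intermediate_results=["reduction in tuberculosis rate"],
    long_term_results=["improvement of population health status"],
)

for stage, items in tb_model.chain():
    print(f"{stage}: {', '.join(items)}")
```

Listing the elements in a fixed order makes gaps visible: a draft model that names inputs but no activities would report `activities` and every later element from `missing_links()`, which is one way stakeholders might spot unstated assumptions.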
A narrow program description can be improved by addressing such factors as staff turnover, inadequate resources, political pressures, or strong community participation that might affect program performance.

Step 3: Focusing the Evaluation Design

The evaluation must be focused to assess the issues of greatest concern to stakeholders while using time and resources as efficiently as possible (7,36,37). Not all design options are equally well-suited to meeting the information needs of stakeholders. After data collection begins, changing procedures might be difficult or impossible, even if better methods become obvious. A thorough plan anticipates intended uses and creates an evaluation strategy with the greatest chance of being useful, feasible, ethical, and accurate. Among the items to consider when focusing an evaluation are purpose, users, uses, questions, methods, and agreements.

Purpose. Articulating an evaluation's purpose (i.e., intent) will prevent premature decision-making regarding how the evaluation should be conducted. Characteristics of the program, particularly its stage of development and context, will influence the evaluation's purpose. Public health evaluations have four general purposes (Box 4). The first is to gain insight, which happens, for example, when assessing the feasibility of an innovative approach to practice. Knowledge from such an evaluation provides information concerning the practicality of a new approach, which can be used to design a program that will be tested for its effectiveness. For a developing program, information from prior evaluations can provide the necessary insight to clarify how its activities should be designed to bring about expected changes. A second purpose for program evaluation is to change practice, which is appropriate in the implementation stage when an established program seeks to describe what it has done and to what extent.
Such information can be used to better describe program processes, to improve how the program operates, and to fine-tune the overall program strategy. Evaluations done for this purpose include efforts to improve the quality, effectiveness, or efficiency of program activities. A third purpose for evaluation is to assess effects. Evaluations done for this purpose examine the relationship between program activities and observed consequences. This type of evaluation is appropriate for mature programs that can define what interventions were delivered to what proportion of the target population. Knowing where to find potential effects can ensure that significant consequences are not overlooked. One set of effects might arise from a direct cause-and-effect relationship to the program. Where these exist, evidence can be found to attribute the effects exclusively to the program. In addition, effects might arise from a causal process involving issues of contribution as well as attribution. For example, if a program's activities are aligned with those of other programs operating in the same setting, certain effects (e.g., the creation of new laws or policies) cannot be attributed solely to one program or another. In such situations, the goal for evaluation is to gather credible evidence that describes each program's contribution in the combined change effort. Establishing accountability for program results is predicated on an ability to conduct evaluations that assess both of these kinds of effects. A fourth purpose, which applies at any stage of program development, involves using the process of evaluation inquiry to affect those who participate in the inquiry. The logic and systematic reflection required of stakeholders who participate in an evaluation can be a catalyst for self-directed change. An evaluation can be initiated with the intent of generating a positive influence on stakeholders. 
Such influences might be to supplement the program intervention (e.g., using a follow-up questionnaire to reinforce program messages); empower program participants (e.g., increasing a client's sense of control over program direction); promote staff development (e.g., teaching staff how to collect, analyze, and interpret evidence); contribute to organizational growth (e.g., clarifying how the program relates to the organization's mission); or facilitate social transformation (e.g., advancing a community's struggle for self-determination) (7,38-42).

Users. Users are the specific persons who will receive evaluation findings. Because intended users directly experience the consequences of inevitable design trade-offs, they should participate in choosing the evaluation focus (7). User involvement is required for clarifying intended uses, prioritizing questions and methods, and preventing the evaluation from becoming misguided or irrelevant.

Uses. Uses are the specific ways in which information generated from the evaluation will be applied. Several uses exist for program evaluation (Box 4). Stating uses in vague terms that appeal to many stakeholders increases the chances the evaluation will not fully address anyone's needs. Uses should be planned and prioritized with input from stakeholders and with regard for the program's stage of development and current context. All uses must be linked to one or more specific users.

Questions. Questions establish boundaries for the evaluation by stating what aspects of the program will be addressed (5-7). Creating evaluation questions encourages stakeholders to reveal what they believe the evaluation should answer. Negotiating and prioritizing questions among stakeholders further refines a viable focus. The question-development phase also might expose differing stakeholder opinions regarding the best unit of analysis.
Certain stakeholders might want to study how programs operate together as a system of interventions to effect change within a community. Other stakeholders might have questions concerning the performance of a single program or a local project within a program. Still others might want to concentrate on specific subcomponents or processes of a project. Clear decisions regarding the questions and corresponding units of analysis are needed in subsequent steps of the evaluation to guide method selection and evidence gathering.

Methods. The methods for an evaluation are drawn from scientific research options, particularly those developed in the social, behavioral, and health sciences (5-7,43-48). A classification of design types includes experimental, quasi-experimental, and observational designs (43,48). No design is better than another under all circumstances. Evaluation methods should be selected to provide the appropriate information to address stakeholders' questions (i.e., methods should be matched to the primary users, uses, and questions). Experimental designs use random assignment to compare the effect of an intervention with otherwise equivalent groups (49). Quasi-experimental methods compare nonequivalent groups (e.g., program participants versus those on a waiting list) or use multiple waves of data to set up a comparison (e.g., interrupted time series) (50,51). Observational methods use comparisons within a group to explain unique features of its members (e.g., comparative case studies or cross-sectional surveys) (45,52-54). The choice of design has implications for what will count as evidence, how that evidence will be gathered, and what kind of claims can be made (including the internal and external validity of conclusions) (55).
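
To make the random assignment used by experimental designs concrete, the following is a minimal sketch under stated assumptions. The function names `randomly_assign` and `difference_in_means`, the participant identifiers, and the outcome scores are all hypothetical and invented for illustration; they are not drawn from the framework or from any real evaluation.

```python
import random
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def randomly_assign(participants: Sequence[str], seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle participants and split them into intervention and comparison groups."""
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(outcomes: Dict[str, float],
                        intervention: List[str],
                        comparison: List[str]) -> float:
    """Mean outcome in the intervention group minus the mean in the comparison group."""
    return mean(outcomes[p] for p in intervention) - mean(outcomes[p] for p in comparison)


# Hypothetical participant IDs and outcome scores, invented for illustration only.
participants = [f"p{i}" for i in range(8)]
intervention, comparison = randomly_assign(participants, seed=42)
outcomes = {p: (3.0 if p in intervention else 2.0) for p in participants}

print("intervention:", intervention)
print("comparison:", comparison)
print("difference in means:", difference_in_means(outcomes, intervention, comparison))
```

Because chance alone decides group membership, the two groups are expected to be equivalent on average before the intervention. A quasi-experimental comparison (e.g., participants versus a waiting list) would reuse `difference_in_means` but could not rely on that equivalence.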
Also, methodologic decisions clarify how the evaluation will operate (e.g., to what extent program participants will be involved; how information sources will be selected; what data collection instruments will be used; who will collect the data; what data management systems will be needed; and what are the appropriate methods of analysis, synthesis, interpretation, and presentation). Because each method option has its own bias and limitations, evaluations that mix methods are generally more effective (44,56-58). During the course of an evaluation, methods might need to be revised or modified. Also, circumstances that make a particular approach credible and useful can change. For example, the evaluation's intended use can shift from improving a program's current activities to determining whether to expand program services to a new population group. Thus, changing conditions might require alteration or iterative redesign of methods to keep the evaluation on track (22). Agreements. Agreements summarize the procedures and clarify roles and responsibilities among those who will execute the evaluation plan (6,12). Agreements describe how the evaluation plan will be implemented by using available resources (e.g., money, personnel, time, and information) (36,37). Agreements also state what safeguards are in place to protect human subjects and, where appropriate, what ethical (e.g., institutional review board) or administrative (e.g., paperwork reduction) approvals have been obtained (59,60). Elements of an agreement include statements concerning the intended purpose, users, uses, questions, and methods, as well as a summary of the deliverables, time line, and budget. The agreement can include all engaged stakeholders but, at a minimum, it must involve the primary users, any providers of financial or in-kind resources, and those persons who will conduct the evaluation and facilitate its use and dissemination. 
The formality of an agreement might vary depending on existing stakeholder relationships. An agreement might be a legal contract, a detailed protocol, or a memorandum of understanding. Creating an explicit agreement verifies the mutual understanding needed for a successful evaluation. It also provides a basis for modifying or renegotiating procedures if necessary. Various activities reflect the requirement to focus the evaluation design (Box 5). Both supporters and skeptics of the program could be consulted to ensure that the proposed evaluation questions are politically viable (i.e., responsive to the varied positions of interest groups). A menu of potential evaluation uses appropriate for the program's stage of development and context could be circulated among stakeholders to determine which is most compelling. Interviews could be held with specific intended users to better understand their information needs and time line for action. Resource requirements could be reduced when users are willing to employ more timely but less precise evaluation methods.

Step 4: Gathering Credible Evidence

An evaluation should strive to collect information that will convey a well-rounded picture of the program so that the information is seen as credible by the evaluation's primary users. Information (i.e., evidence) should be perceived by stakeholders as believable and relevant for answering their questions. Such decisions depend on the evaluation questions being posed and the motives for asking them. For certain questions, a stakeholder's standard for credibility might require having the results of a controlled experiment; whereas for another question, a set of systematic observations (e.g., interactions between an outreach worker and community residents) would be the most credible. 
Consulting specialists in evaluation methodology might be necessary in situations where concern for data quality is high or where serious consequences exist associated with making errors of inference (i.e., concluding that program effects exist when none do, concluding that no program effects exist when in fact they do, or attributing effects to a program that has not been adequately implemented) (61,62). Having credible evidence strengthens evaluation judgments and the recommendations that follow from them. Although all types of data have limitations, an evaluation's overall credibility can be improved by using multiple procedures for gathering, analyzing, and interpreting data. Encouraging participation by stakeholders can also enhance perceived credibility. When stakeholders are involved in defining and gathering data that they find credible, they will be more likely to accept the evaluation's conclusions and to act on its recommendations (7,38). Aspects of evidence gathering that typically affect perceptions of credibility include indicators, sources, quality, quantity, and logistics. Indicators. Indicators define the program attributes that pertain to the evaluation's focus and questions (63-66). Because indicators translate general concepts regarding the program, its context, and its expected effects into specific measures that can be interpreted, they provide a basis for collecting evidence that is valid and reliable for the evaluation's intended uses. Indicators address criteria that will be used to judge the program; therefore, indicators reflect aspects of the program that are meaningful for monitoring (66-70). 
Examples of indicators that can be defined and tracked include measures of program activities (e.g., the program's capacity to deliver services; the participation rate; levels of client satisfaction; the efficiency of resource use; and the amount of intervention exposure) and measures of program effects (e.g., changes in participant behavior, community norms, policies or practices, health status, quality of life, and the settings or environment around the program). Defining too many indicators can detract from the evaluation's goals; however, multiple indicators are needed for tracking the implementation and effects of a program. One approach to developing multiple indicators is based on the program logic model (developed in the second step of the evaluation). The logic model can be used as a template to define a spectrum of indicators leading from program activities to expected effects (23,29-35). For each step in the model, qualitative/quantitative indicators could be developed to suit the concept in question, the information available, and the planned data uses. Relating indicators to the logic model allows the detection of small changes in performance faster than if a single outcome were the only measure used. Lines of responsibility and accountability are also clarified through this approach because the measures are aligned with each step of the program strategy. Further, this approach results in a set of broad-based measures that reveal how health outcomes are the consequence of intermediate effects of the program. Intangible factors (e.g., service quality, community capacity [71], or interorganizational relations) that also affect the program can be measured by systematically recording markers of what is said or done when the concept is expressed (72,73). During an evaluation, indicators might need to be modified or new ones adopted. 
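The idea of using the logic model as a template for indicators can be illustrated with a small sketch. The step labels, indicator names, and the helper function below are hypothetical and are not taken from the framework; they only show how indicators might be recorded against each step of a logic model so that steps with no indicator yet are easy to spot.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Indicator:
    name: str
    kind: str  # "qualitative" or "quantitative"


@dataclass
class LogicModelStep:
    label: str
    indicators: List[Indicator] = field(default_factory=list)


def steps_without_indicators(model: List[LogicModelStep]) -> List[str]:
    """Return the labels of logic-model steps that have no indicator defined."""
    return [step.label for step in model if not step.indicators]


# Hypothetical logic model running from program activities to expected effects.
model = [
    LogicModelStep(
        "program activities",
        [
            Indicator("participation rate", "quantitative"),
            Indicator("client satisfaction", "qualitative"),
        ],
    ),
    LogicModelStep(
        "intermediate effects",
        [Indicator("change in participant behavior", "quantitative")],
    ),
    LogicModelStep("health outcomes"),  # no indicator defined yet
]

gaps = steps_without_indicators(model)
print(gaps)
```

A listing like this is only a bookkeeping aid; which indicators are meaningful for each step remains a matter for stakeholders to negotiate.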
Measuring program performance by tracking indicators is only part of an evaluation and must not be confused as a singular basis for decision-making. Well-documented problems result from using performance indicators as a substitute for completing the evaluation process and reaching fully justified conclusions (66,67,74). An indicator (e.g., a rising rate of disease) might be assumed to reflect a failing program when, in reality, the indicator might be influenced by changing conditions that are beyond the program's control. Sources. Sources of evidence in an evaluation are the persons, documents, or observations that provide information for the inquiry (Box 6). More than one source might be used to gather evidence for each indicator to be measured. Selecting multiple sources provides an opportunity to include different perspectives regarding the program and thus enhances the evaluation's credibility. An inside perspective might be understood from internal documents and comments from staff or program managers, whereas clients, neutral observers, or those who do not support the program might provide a different, but equally relevant perspective. Mixing these and other perspectives provides a more comprehensive view of the program. The criteria used for selecting sources should be stated clearly so that users and other stakeholders can interpret the evidence accurately and assess if it might be biased (45,75-77). In addition, some sources are narrative in form and others are numeric. The integration of qualitative and quantitative information can increase the chances that the evidence base will be balanced, thereby meeting the needs and expectations of diverse users (43,45,56,57,78-80). Finally, in certain cases, separate evaluations might be selected as sources for conducting a larger synthesis evaluation (58,81,82). Quality. Quality refers to the appropriateness and integrity of information used in an evaluation. 
High-quality data are reliable, valid, and informative for their intended use. Well-defined indicators enable easier collection of quality data. Other factors affecting quality include instrument design, data-collection procedures, training of data collectors, source selection, coding, data management, and routine error checking. Obtaining quality data will entail trade-offs (e.g., breadth versus depth) that should be negotiated among stakeholders. Because all data have limitations, the intent of a practical evaluation is to strive for a level of quality that meets the stakeholders' threshold for credibility. Quantity. Quantity refers to the amount of evidence gathered in an evaluation. The amount of information required should be estimated in advance, or where evolving processes are used, criteria should be set for deciding when to stop collecting data. Quantity affects the potential confidence level or precision of the evaluation's conclusions. It also partly determines whether the evaluation will have sufficient power to detect effects (83). All evidence collected should have a clear, anticipated use. Correspondingly, only a minimal burden should be placed on respondents for providing information. Logistics. Logistics encompass the methods, timing, and physical infrastructure for gathering and handling evidence. Each technique selected for gathering evidence (Box 7) must be suited to the source(s), analysis plan, and strategy for communicating findings. Persons and organizations also have cultural preferences that dictate acceptable ways of asking questions and collecting information, including who would be perceived as an appropriate person to ask the questions. For example, some participants might be willing to discuss their health behavior with a stranger, whereas others are more at ease with someone they know. 
The procedures for gathering evidence in an evaluation (Box 8) must be aligned with the cultural conditions in each setting of the project and scrutinized to ensure that the privacy and confidentiality of the information and sources are protected (59,60,84).

Step 5: Justifying Conclusions

The evaluation conclusions are justified when they are linked to the evidence gathered and judged against agreed-upon values or standards set by the stakeholders. Stakeholders must agree that conclusions are justified before they will use the evaluation results with confidence. Justifying conclusions on the basis of evidence includes standards, analysis and synthesis, interpretation, judgment, and recommendations. Standards. Standards reflect the values held by stakeholders, and those values provide the basis for forming judgments concerning program performance. Using explicit standards distinguishes evaluation from other approaches to strategic management in which priorities are set without reference to explicit values. In practice, when stakeholders articulate and negotiate their values, these become the standards for judging whether a given program's performance will, for example, be considered successful, adequate, or unsuccessful. An array of value systems might serve as sources of norm-referenced or criterion-referenced standards (Box 9). When operationalized, these standards establish a comparison by which the program can be judged (3,7,12). Analysis and Synthesis. Analysis and synthesis of an evaluation's findings might detect patterns in evidence, either by isolating important findings (analysis) or by combining sources of information to reach a larger understanding (synthesis). Mixed method evaluations require the separate analysis of each evidence element and a synthesis of all sources for examining patterns of agreement, convergence, or complexity. 
Deciphering facts from a body of evidence involves deciding how to organize, classify, interrelate, compare, and display information (7,85-87). These decisions are guided by the questions being asked, the types of data available, and by input from stakeholders and primary users. Interpretation. Interpretation is the effort of figuring out what the findings mean and is part of the overall effort to understand the evidence gathered in an evaluation (88). Uncovering facts regarding a program's performance is not sufficient to draw evaluative conclusions. Evaluation evidence must be interpreted to determine the practical significance of what has been learned. Interpretations draw on information and perspectives that stakeholders bring to the evaluation inquiry and can be strengthened through active participation or interaction. Judgments. Judgments are statements concerning the merit, worth, or significance of the program. They are formed by comparing the findings and interpretations regarding the program against one or more selected standards. Because multiple standards can be applied to a given program, stakeholders might reach different or even conflicting judgments. For example, a program that increases its outreach by 10% from the previous year might be judged positively by program managers who are using the standard of improved performance over time. However, community members might feel that despite improvements, a minimum threshold of access to services has not been reached. Therefore, by using the standard of social equity, their judgment concerning program performance would be negative. Conflicting claims regarding a program's quality, value, or importance often indicate that stakeholders are using different standards for judgment. In the context of an evaluation, such disagreement can be a catalyst for clarifying relevant values and for negotiating the appropriate bases on which the program should be judged. Recommendations. 
Recommendations are actions for consideration resulting from the evaluation. Forming recommendations is a distinct element of program evaluation that requires information beyond what is necessary to form judgments regarding program performance (3). Knowing that a program is able to reduce the risk of disease does not translate necessarily into a recommendation to continue the effort, particularly when competing priorities or other effective alternatives exist. Thus, recommendations for continuing, expanding, redesigning, or terminating a program are separate from judgments regarding a program's effectiveness. Making recommendations requires information concerning the context, particularly the organizational context, in which programmatic decisions will be made (89). Recommendations that lack sufficient evidence or those that are not aligned with stakeholders' values can undermine an evaluation's credibility. By contrast, an evaluation can be strengthened by recommendations that anticipate the political sensitivities of intended users and highlight areas that users can control or influence (7). Sharing draft recommendations, soliciting reactions from multiple stakeholders, and presenting options instead of directive advice increase the likelihood that recommendations will be relevant and well-received. Various activities fulfill the requirement for justifying conclusions in an evaluation (Box 10). Conclusions could be strengthened by a) summarizing the plausible mechanisms of change; b) delineating the temporal sequence between activities and effects; c) searching for alternative explanations and showing why they are unsupported by the evidence; and d) showing that the effects can be repeated. When different but equally well-supported conclusions exist, each could be presented with a summary of its strengths and weaknesses. Creative techniques (e.g., the Delphi process**) could be used to establish consensus among stakeholders when assigning value judgments (90). 
Techniques for analyzing, synthesizing, and interpreting findings should be agreed on before data collection begins to ensure that all necessary evidence will be available.

Step 6: Ensuring Use and Sharing Lessons Learned

Lessons learned in the course of an evaluation do not automatically translate into informed decision-making and appropriate action. Deliberate effort is needed to ensure that the evaluation processes and findings are used and disseminated appropriately. Preparing for use involves strategic thinking and continued vigilance, both of which begin in the earliest stages of stakeholder engagement and continue throughout the evaluation process. Five elements are critical for ensuring use of an evaluation: design, preparation, feedback, follow-up, and dissemination. Design. Design refers to how the evaluation's questions, methods, and overall processes are constructed. As discussed in the third step of this framework, the design should be organized from the start to achieve intended uses by primary users. Having a clear design that is focused on use helps persons who will conduct the evaluation to know precisely who will do what with the findings and who will benefit from being a part of the evaluation. Furthermore, the process of creating a clear design will highlight ways that stakeholders, through their contributions, can enhance the relevance, credibility, and overall utility of the evaluation. Preparation. Preparation refers to the steps taken to rehearse eventual use of the evaluation findings. The ability to translate new knowledge into appropriate action is a skill that can be strengthened through practice. Building this skill can itself be a useful benefit of the evaluation (38,39,91). Rehearsing how potential findings (particularly negative findings) might affect decision-making will prepare stakeholders for eventually using the evidence (92). 
Primary users and other stakeholders could be given a set of hypothetical results and asked to explain what decisions or actions they would make on the basis of this new knowledge. If they indicate that the evidence presented is incomplete and that no action would be taken, this is a sign that the planned evaluation should be modified. Preparing for use also gives stakeholders time to explore positive and negative implications of potential results and time to identify options for program improvement. Feedback. Feedback is the communication that occurs among all parties to the evaluation. Giving and receiving feedback creates an atmosphere of trust among stakeholders; it keeps an evaluation on track by letting those involved stay informed regarding how the evaluation is proceeding. Primary users and other stakeholders have a right to comment on decisions that might affect the likelihood of obtaining useful information. Stakeholder feedback is an integral part of evaluation, particularly for ensuring use. Obtaining feedback can be encouraged by holding periodic discussions during each step of the evaluation process and routinely sharing interim findings, provisional interpretations, and draft reports. Follow-Up. Follow-up refers to the technical and emotional support that users need during the evaluation and after they receive evaluation findings. Because of the effort required, reaching justified conclusions in an evaluation can seem like an end in itself; however, active follow-up might be necessary to remind intended users of their planned use. Follow-up might also be required to prevent lessons learned from becoming lost or ignored in the process of making complex or politically sensitive decisions. To guard against such oversight, someone involved in the evaluation should serve as an advocate for the evaluation's findings during the decision-making phase. 
This type of advocacy increases appreciation of what was discovered and what actions are consistent with the findings. Facilitating use of evaluation findings also carries with it the responsibility for preventing misuse (7,12,74,93,94). Evaluation results are always bound by the context in which the evaluation was conducted. However, certain stakeholders might be tempted to take results out of context or to use them for purposes other than those agreed on. For instance, inappropriately generalizing the results from a single case study to make decisions that affect all sites in a national program would constitute misuse of the case study evaluation. Similarly, stakeholders seeking to undermine a program might misuse results by overemphasizing negative findings without giving regard to the program's positive attributes. Active follow-up might help prevent these and other forms of misuse by ensuring that evidence is not misinterpreted and is not applied to questions other than those that were the central focus of the evaluation. Dissemination. Dissemination is the process of communicating either the procedures or the lessons learned from an evaluation to relevant audiences in a timely, unbiased, and consistent fashion. Although documentation of the evaluation is needed, a formal evaluation report is not always the best or even a necessary product. Like other elements of the evaluation, the reporting strategy should be discussed in advance with intended users and other stakeholders. Such consultation ensures that the information needs of relevant audiences will be met. Planning effective communication also requires considering the timing, style, tone, message source, vehicle, and format of information products. Regardless of how communications are constructed, the goal for dissemination is to achieve full disclosure and impartial reporting. 
A checklist of items to consider when developing evaluation reports includes tailoring the report content for the audience, explaining the focus of the evaluation and its limitations, and listing both the strengths and weaknesses of the evaluation (Box 11) (6). Additional Uses. Additional uses for evaluation flow from the process of conducting the evaluation; these process uses have value and should be encouraged because they complement the uses of the evaluation findings (Box 12) (7,38,93,94). Those persons who participate in an evaluation can experience profound changes in thinking and behavior. In particular, when newcomers to evaluation begin to think as evaluators, fundamental shifts in perspective can occur. Evaluation prompts staff to clarify their understanding of program goals. This greater clarity allows staff to function cohesively as a team, focused on a common end (95). Immersion in the logic, reasoning, and values of evaluation can lead to lasting impacts (e.g., basing decisions on systematic judgments instead of on unfounded assumptions) (7). Additional process uses for evaluation include defining indicators to discover what matters to decision makers and making outcomes matter by changing the structural reinforcements connected with outcome attainment (e.g., by paying outcome dividends to programs that save money through their prevention efforts) (96). The benefits that arise from these and other process uses provide further rationale for initiating evaluation activities at the beginning of a program.

Standards for Effective Evaluation

Public health professionals will recognize that the basic steps of the framework for program evaluation are part of their routine work. In day-to-day public health practice, stakeholders are consulted; program goals are defined; guiding questions are stated; data are collected, analyzed, and interpreted; judgments are formed; and lessons are shared. 
Although informal evaluation occurs through routine practice, standards exist to assess whether a set of evaluative activities are well-designed and working to their potential. The Joint Committee on Standards for Educational Evaluation has developed program evaluation standards for this purpose (12). These standards, designed to assess evaluations of educational programs, are also relevant for public health programs. The program evaluation standards make conducting sound and fair evaluations practical. The standards provide practical guidelines to follow when having to decide among evaluation options. The standards help avoid creating an imbalanced evaluation (e.g., one that is accurate and feasible but not useful or one that would be useful and accurate but is infeasible). Furthermore, the standards can be applied while planning an evaluation and throughout its implementation. The Joint Committee is unequivocal in that, "the standards are guiding principles, not mechanical rules. . . . In the end, whether a given standard has been addressed adequately in a particular situation is a matter of judgment" (12). In the Joint Committee's report, standards are grouped into the following four categories and include a total of 30 specific standards (Boxes 13-16). As described in the report, each category has an associated list of guidelines and common errors, illustrated with applied case examples:

Standard 1: Utility

Utility standards ensure that information needs of evaluation users are satisfied. Seven utility standards (Box 13) address such items as identifying those who will be impacted by the evaluation, the amount and type of information collected, the values used in interpreting evaluation findings, and the clarity and timeliness of evaluation reports.

Standard 2: Feasibility

Feasibility standards ensure that the evaluation is viable and pragmatic. The three feasibility standards (Box 14) emphasize that the evaluation should employ practical, nondisruptive procedures; that the differing political interests of those involved should be anticipated and acknowledged; and that the use of resources in conducting the evaluation should be prudent and produce valuable findings.

Standard 3: Propriety

Propriety standards ensure that the evaluation is ethical (i.e., conducted with regard for the rights and interests of those involved and affected). Eight propriety standards (Box 15) address such items as developing protocols and other agreements for guiding the evaluation; protecting the welfare of human subjects; weighing and disclosing findings in a complete and balanced fashion; and addressing any conflicts of interest in an open and fair manner.

Standard 4: Accuracy

Accuracy standards ensure that the evaluation produces findings that are considered correct. Twelve accuracy standards (Box 16) include such items as describing the program and its context; articulating in detail the purpose and methods of the evaluation; employing systematic procedures to gather valid and reliable information; applying appropriate qualitative or quantitative methods during analysis and synthesis; and producing impartial reports containing conclusions that are justified.

The steps and standards are used together throughout the evaluation process. For each step, a subset of relevant standards should be considered (Box 17). 
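As a rough illustration of how the four categories fit together, the sketch below records the number of standards in each category as stated above and flags which categories a draft evaluation plan has not yet addressed. The function name and the true/false assessment are hypothetical simplifications; the Joint Committee describes the standards as guiding principles that call for judgment, not as a mechanical checklist.

```python
# Number of standards per category, as stated in the text above.
STANDARD_COUNTS = {
    "utility": 7,
    "feasibility": 3,
    "propriety": 8,
    "accuracy": 12,
}


def unmet_categories(assessment):
    """Return the categories not marked as addressed (hypothetical helper).

    `assessment` maps a category name to True or False; a missing
    category is treated as not addressed.
    """
    return [name for name in STANDARD_COUNTS if not assessment.get(name, False)]


total_standards = sum(STANDARD_COUNTS.values())
print(total_standards)  # 30, matching the total given in the Joint Committee's report

# Hypothetical draft plan that is accurate, feasible, and ethical but not yet useful.
draft_plan = {
    "utility": False,
    "feasibility": True,
    "propriety": True,
    "accuracy": True,
}
print(unmet_categories(draft_plan))  # ['utility']
```

A plan flagged in this way corresponds to the imbalanced evaluation described earlier, such as one that is accurate and feasible but not useful.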
APPLYING THE FRAMEWORK

Conducting Optimal Evaluations

Public health professionals can no longer question whether to evaluate their programs; instead, the appropriate questions are

The framework for program evaluation helps answer these questions by guiding its users in selecting evaluation strategies that are useful, feasible, ethical, and accurate. To use the recommended framework in a specific program context requires practice, which builds skill in both the science and art of program evaluation. When applying the framework, the challenge is to devise an optimal -- as opposed to an ideal -- strategy. An optimal strategy is one that accomplishes each step in the framework in a way that accommodates the program context and meets or exceeds all relevant standards. CDC's evaluations of human immunodeficiency virus prevention efforts, including school-based programs, provide examples of optimal strategies for national-, state-, and local-level evaluation (97,98).

Assembling an Evaluation Team

Harnessing and focusing the efforts of a collaborative group is one approach to conducting an optimal evaluation (24,25). A team approach can succeed when a small group of carefully selected persons decides what the evaluation must accomplish and pools resources to implement the plan. Stakeholders might have varying levels of involvement on the team that correspond to their own perspectives, skills, and concerns. A leader must be designated to coordinate the team and maintain continuity throughout the process; thereafter, the steps in evaluation practice guide the selection of team members. For example,

All organizations, even those that are able to find evaluation team members within their own agency, should collaborate with partners and take advantage of community resources when assembling an evaluation team. This strategy increases the available resources and enhances the evaluation's credibility. Furthermore, a diverse team of engaged stakeholders has a greater probability of conducting a culturally competent evaluation (i.e., one that understands and is sensitive to the persons, conditions, and contexts associated with the program) (99,100). Although challenging for the coordinator and the participants, the collaborative approach is practical because of the benefits it brings (e.g., reduces suspicion and fear, increases awareness and commitment, increases the possibility of achieving objectives, broadens knowledge base, teaches evaluation skills, strengthens partnerships, increases the possibility that findings will be used, and allows for differing perspectives) (8,24).

Addressing Common Concerns

Common concerns regarding program evaluation are clarified by using this framework. Evaluations might not be undertaken because they are misperceived as having to be costly. However, the expense of an evaluation is relative; the cost depends on the questions being asked and the level of precision desired for the answers. A simple, low-cost evaluation can deliver valuable results. Rather than discounting evaluations as time-consuming and tangential to program operations (e.g., left to the end of a program's project period), the framework encourages conducting evaluations from the beginning that are timed strategically to provide necessary feedback to guide action. This makes integrating evaluation with program practice possible. Another concern centers on the perceived technical demands of designing and conducting an evaluation. 
Although circumstances exist where controlled environments and elaborate analytic techniques are needed, most public health program evaluations do not require such methods. Instead, the practical approach endorsed by this framework focuses on questions that will improve the program by using context-sensitive methods and analytic techniques that summarize accurately the meaning of qualitative and quantitative information. Finally, the prospect of evaluation troubles some program staff because they perceive evaluation methods as punitive, exclusionary, or adversarial. The framework encourages an evaluation approach that is designed to be helpful and engages all interested stakeholders in a process that welcomes their participation. Sanctions to be applied, if any, should not result from discovering negative findings, but from failing to use the learning to change for greater effectiveness (10).

EVALUATION TRENDS

Interest in program improvement and accountability continues to grow in government, private, and nonprofit sectors. The Government Performance and Results Act requires federal agencies to set performance goals and to measure annual results. Nonprofit donor organizations (e.g., United Way) have integrated evaluation into their program activities and now require that grant recipients measure program outcomes (30). Public-health-oriented foundations (e.g., W.K. Kellogg Foundation) have also begun to emphasize the role of evaluation in their programming (24). Innovative approaches to staffing program evaluations have also emerged. For example, the American Cancer Society (ACS) Collaborative Evaluation Fellows Project links students and faculty in 17 schools of public health with the ACS national and regional offices to evaluate local cancer control programs (101). These activities across public and private sectors reflect a collective investment in building evaluation capacity for improving performance and being accountable for achieving public health results. 
Investments in evaluation capacity are made to improve program quality and effectiveness. One of the best examples of the beneficial effects of conducting evaluations is the Malcolm Baldrige National Quality Award Program (102).*** Evidence demonstrates that the evaluative processes required to win the Baldrige Award have helped American businesses outperform their competitors (103). Now these same effects on quality and performance are being translated to the health and human service sector. Recently, Baldrige Award criteria were developed for judging the excellence of health care organizations (104). This extension to the health-care industry illustrates the critical role for evaluation in achieving health and human service objectives. Likewise, the framework for program evaluation was developed to help integrate evaluation into the corporate culture of public health and fulfill CDC's operating principles for public health practice (1,2). Building evaluation capacity throughout the public health workforce is a goal also shared by the Public Health Functions Steering Committee. Chaired by the U.S. Surgeon General, this committee identified core competencies for evaluation as essential for the public health workforce of the twenty-first century (105). With its focus on making evaluation accessible to all program staff and stakeholders, the framework helps to promote evaluation literacy and competency among all public health professionals.

SUMMARY

Evaluation is the only way to separate programs that promote health and prevent injury, disease, or disability from those that do not; it is a driving force for planning effective public health strategies, improving existing programs, and demonstrating the results of resource investments. Evaluation also focuses attention on the common purpose of public health programs and asks whether the magnitude of investment matches the tasks to be accomplished (95).
The recommended framework is both a synthesis of existing evaluation practices and a standard for further improvement. It supports a practical approach to evaluation that is based on steps and standards applicable in public health settings. Because the framework is purposefully general, it provides a guide for designing and conducting specific evaluation projects across many different program areas. In addition, the framework can be used as a template to create or enhance program-specific evaluation guidelines that further operationalize the steps and standards in ways that are appropriate for each program (20,96,106-112). Thus, the recommended framework is one of several tools that CDC can use with its partners to improve and account for their health promotion and disease or injury prevention work.

ADDITIONAL INFORMATION

Sources of additional information are available for those who wish to begin applying the framework presented in this report or who wish to enhance their understanding of program evaluation. In particular, the following resources are recommended:

References

* The program evaluation standards are a standard approved by the American National Standards Institute (ANSI) and have been endorsed by the American Evaluation Association and 14 other professional organizations (ANSI Standard No. JSEE-PR 1994, Approved March 15, 1994).

** Developed by the Rand Corporation, the Delphi process is an iterative method for arriving at a consensus concerning an issue or problem by circulating questions and responses to a panel of qualified reviewers whose identities are usually not revealed to one another. The questions and responses are progressively refined with each round until a viable option or solution is reached.

*** The Malcolm Baldrige National Quality Improvement Act of 1987 (Public Law 100-107) established a public-private partnership focused on encouraging American business and other organizations to practice effective quality management. The annual award process, which involves external review as well as self-assessment against Criteria for Performance Excellence, provides a proven course for organizations to improve significantly the quality of their goods and services.

BOX 1. Steps in evaluation practice and standards for effective evaluation

Steps in Evaluation Practice

Standards for Effective Evaluation

Figure 1

BOX 2. Engaging stakeholders

Figure 2

BOX 3. Describing the program

BOX 4. Selected uses for evaluation in public health practice by category of purpose

Gain insight

BOX 5. Focusing the evaluation design

Adapted from a) Joint Committee on Standards for Educational Evaluation. Program evaluation standards: how to assess evaluations of educational programs. 2nd ed. Thousand Oaks, CA: Sage Publications, 1994; and b) U.S. General Accounting Office. Designing evaluations. Washington, DC: U.S. General Accounting Office, 1991; publication no. GAO/PEMD-10.1.4.

BOX 6. Selected sources of evidence for an evaluation

Persons

BOX 7. Selected techniques for gathering evidence

BOX 8. Gathering credible evidence

BOX 9. Selected sources of standards for judging program performance

BOX 10. Justifying conclusions

BOX 11. Checklist for ensuring effective evaluation reports

BOX 12. Ensuring use and sharing lessons learned

BOX 13. Utility standards

The following utility standards ensure that an evaluation will serve the information needs of intended users:

BOX 14. Feasibility standards

The following feasibility standards ensure that an evaluation will be realistic, prudent, diplomatic, and frugal:

BOX 15. Propriety standards

The following propriety standards ensure that an evaluation will be conducted legally, ethically, and with regard for the welfare of those involved in the evaluation as well as those affected by its results:

BOX 16. Accuracy standards

The following accuracy standards ensure that an evaluation will convey technically adequate information regarding the determining features of merit of the program:

BOX 17. Cross-reference of steps and relevant standards

All MMWR HTML versions of articles are electronic conversions from ASCII text into HTML. This conversion may have resulted in character translation or format errors in the HTML version. Users should not rely on this HTML document, but are referred to the electronic PDF version and/or the original MMWR paper copy for the official text, figures, and tables. An original paper copy of this issue can be obtained from the Superintendent of Documents, U.S. Government Printing Office (GPO), Washington, DC 20402-9371; telephone: (202) 512-1800. Contact GPO for current prices. Questions or messages regarding errors in formatting should be addressed to mmwrq@cdc.gov.

Page converted: 9/8/1999


This page last reviewed 5/2/01
